Beyond fashion: Deep Learning with Catalyst

Learn the basics of the Catalyst framework for deep and reinforced learning that promises to take the grunt work out of a data engineer’s daily job and put experimentation on the rails. Without a single Python loop in sight and with no Jupyter notebooks involved, we will take all the steps necessary to build, train, and deploy an image classifier for fashion items that will give predictions through HTTP API.

As advances in deep learning step out of the realm of academia—and into production, the need for a framework that “breaks the training cycle” is more palpable than ever. There is no shortage of powerful and well-respected tools in machine learning arsenal and no shortage of eureka moments. However, when it comes to developing a reproducible, maintainable, and production-ready pipeline, companies are mostly left to their own devices and rely on in-house solutions that rarely get open sourced.

The current state of affairs compares to how things were in web development in the early 2000s. Everyone on the market pretty much solved the same task—putting a layer of HTML between the HTTP request and a database—but rarely developers enjoyed it. Until Rails for Ruby, Django for Python, and other web frameworks came along.

There is no ultimate framework for deep learning yet, and Catalyst is still too early in development to be crowned as Rails or Django of data science. But it is certainly the one to watch. Here are my top reasons:

- The team behind Catalyst are professionals with extensive research and production experience. All core contributors are members of Open Data Science: the largest data science community in the world, with 42,000 active participants.

- It is rapidly developed in a true open source way: maintainers strive for test coverage, frequent refactoring, good OOP architecture, controlling the technical debt. New contributors are welcome, and pull requests usually get reviewed in a matter of days. The team is polite and open to new ideas.

- Reproducibility is high on the list of priorities: the difficulty to independently achieve the same results as a research paper is a big problem in our community. Catalyst solves it by storing the experiment code, configuration, model checkpoints, and logs.

- Catalyst’s system of callbacks makes it easy to extend any part of the pipeline with additional functionality without drastic changes to a codebase.

Catalyst is a part of the PyTorch ecosystem, so if you have any experience with PyTorch—you can start almost immediately. To use yet another software analogy, Catalyst for PyTorch is what Kubernetes is for Docker: taking a popular tool to a new level.

The Kubernetes analogy does not end here, as Catalyst decided to build its Config API around YAML. That makes the tedious task of adjusting training configuration open for collaboration, reuse, and documentation, allowing to reach for the best result in a professional team environment.

This tutorial assumes basic knowledge of tools and concepts in deep learning. Feel free to read my previous article, “Learning how to learn deep learning”, to set yourself on the right path.

To follow the tutorial, you will need a recent version of Python and a pip package manager. We will try to walk you through every line of code and explain the project organization as we go.

Even if your experience with deep learning is minimal—you can still benefit from this tutorial, as you will be able to study each piece of the pipeline separately and learn best practices for organising machine learning code.

You will also be able to reuse the final pipeline on a different dataset and show off your result through a working web API.

Another reason to follow this tutorial is that, due to its young age, Catalyst has not yet grown decent documentation around itself: this text is our attempt to make an accessible introduction to the framework.

ToC

- Digits out, trouser in

- Step O: Requirements

- Step 1: Dataset

- Step 2: Model

- Step 3: Experiment

- Step 4: Training configuration

- Step 5: Training the model

- Step 6: Inference

- Step 7: Production

Digits out, trousers in

Anyone who has ever tried to play around with machine learning must have heard about the MNIST database: the mother of all datasets. It contains 70,000 images of handwritten digits scribbled by American high school students and American Census Bureau employees, shrunk into boxes of 28 by 28 pixels.

The problem with this dataset is that it’s been around for a while and became too easy even for common machine learning algorithms: classic solutions achieve 97% accuracy on MNIST, and modern convolutional nets beat it with 99,7%. It is also vastly overused, and some experts argue that it does not represent modern computer vision tasks anymore.

Luckily, data scientists from Zalando, the fashion and lifestyle e-commerce giant, have come up with a drop-in replacement for MNIST by keeping the original data format and substituting scanned digits by the real-world pictures of fashion items: T-shirts, trousers, sandals, bags, and such. Perfect for us, as we want our Catalyst deep learning pipeline to sweat a bit more. Welcome, Fashion-MNIST!

Fashion-MNIST Dataset

Both MNIST and Fashion-MNIST store sample images in a specific binary format, so we need to do some byte-wrangling to convert it to a more common PNG. Nothing a short Python script can’t handle. All that is left for you to do is to download and unzip the images. Do it from the terminal in the root of the folder where we are going to keep our project.

mkdir catalyst-fashion && cd $_

# Download transformed dataset

wget https://github.com/DeepLenin/fashion-mnist_png/raw/master/data.zip

# Extract images into a data directory

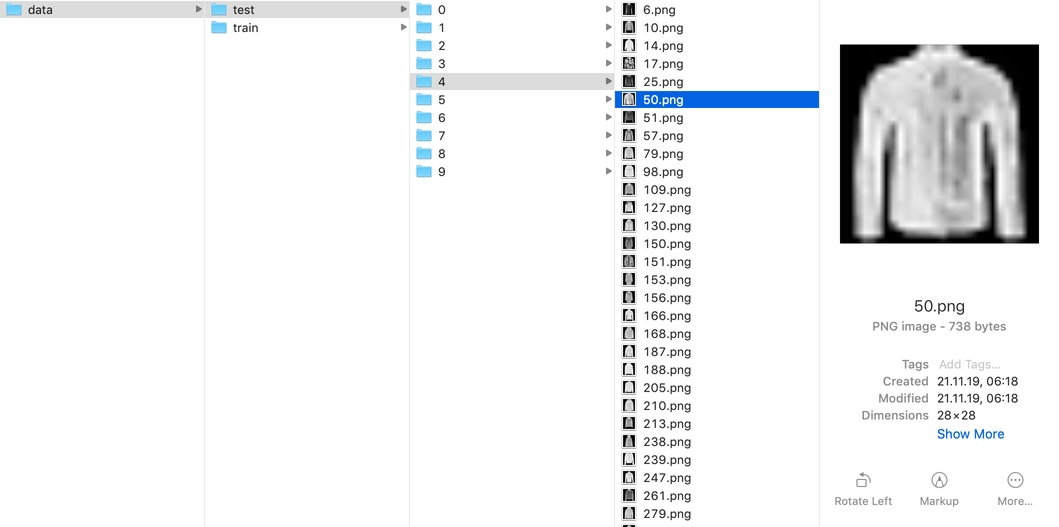

unzip data.zipThe resulting data directory has a conventional layout: train and test subdirectories, each containing respective datasets organized by labels as per the Fashion-MNIST description, where 0 is “T-Shirt,” and 9 is “Ankle boot,” with other categories of items in between.

tree -d data

data

├── test

│ ├── 0

│ ├── 1

│ ├── 2

│ ├── 3

│ ├── 4

│ ├── 5

│ ├── 6

│ ├── 7

│ ├── 8

│ └── 9

└── train

├── 0

├── 1

├── 2

├── 3

├── 4

├── 5

├── 6

├── 7

├── 8

└── 9There are 60,000 images inside the train folder and 10,000 images inside the test. Folder names are self-explanatory: one is used to train our algorithm, another—to test its performance.

Extracted images

Step 0: Requirements

After we are done with images, it is finally time to start writing code. First things first, we would need to install project dependencies: in our case, they will be different for development and production, so we start with a local one. Create the local_requirements.txt file in the root of your project folder:

catalyst==20.2.4

numpy>=1.17.4

opencv-python>=4.0

torch>=1.3.1

albumentations==0.4.3

fastapi>=0.43.0

uvicorn>=0.10.8Now run pip install -r local_requirements.txt to install dependencies locally.

Step 1: Dataset

Catalyst follows the convention-over-configuration principle. All it expects from us as pipeline designers is organize our work in a certain way. Catalyst will take care of the rest, and you’ll be able to run and reproduce your experiments without the cognitive overhead of “where do I put stuff?” or the need to write a single-script code full of loops in a Jupyter notebook.

Don’t get me wrong, notebooks are perfect for demonstration purposes, but they quickly become unwieldy when we want to iterate fast, use source control to its fullest, and roll out models for production.

First, let’s create an src folder where we are going to store the main elements of our pipeline and create our first Python file inside: dataset.py

# make sure you are in catalyst-fashion folder. If not, cd there

pwd

mkdir src && touch src/dataset.pyCatalyst works naturally with PyTorch’s Dataset type, so we will need to use it as our base class and override a couple of methods to tailor functionality to our use case.

We will also need to import the cv2 package to use methods from the OpenCV computer vision library and deal with images as with NumPy arrays, so we will also need to import numpy. Let’s get started:

# src/dataset.py

import cv2

import numpy as np

import torch

from torch.utils.data import Dataset

CLASSES = {

0: "T-shirt/top",

1: "Trouser",

2: "Pullover",

3: "Dress",

4: "Coat",

5: "Sandal",

6: "Shirt",

7: "Sneaker",

8: "Bag",

9: "Ankle boot"

}

class MNISTDataset(Dataset):

def __init__(self, paths: list, mode: str, transforms=None):

self.paths = paths

assert mode in ["train", "valid", "infer"], \

"Mode should be one of `train`, `valid` or `infer`"

self.mode = mode

self.transforms = transformsHere, we created a dictionary of human-readable names for our classification labels and inherited our MNISTDataset class from PyTorch’s built-in Dataset. We also overrode the __init__ constructor so that our class can store attributes required for our use case:

paths—a list of file paths to our images.mode—usually, deep learning pipelines work with three dataset types: train, valid, and infer. train and valid datasets will be used during our training, train—to feed data to the algorithm, and valid to check how it performs. In both cases, we’ll be getting an item, transforming it somehow (to prevent overfitting and increase stability), and returning it with a corresponding ground-truth label. By contrast, infer is the dataset upon which our trained model will perform predictions: items from infer won’t come bundled with category labels (they are for a machine to guess).transforms—a list of transform objects from albumentations library. We might need transformations (flip, scale, etc.) while we train the model, but we won’t use them during the inference step.

Now we need to override the __len__ method—so our dataset “knows” how many items it contains:

# src/dataset.py

# ... continued from the example above.

# Adjust the indentation accordingly when copying and pasting!

def __len__(self):

return len(self.paths)Finally, let’s move to the most interesting bit, overriding the __getitem__ method. Most of the Catalyst’s criteria—loss functions used to measure the performance of the model during training—would expect a dictionary with “features” and “targets” keys for an item at a given index. “features” will contain a tensor with item’s features at a given stage, and “targets” will contain the item’s label. We only provide “targets” key for training and validation steps: during the inference step, the target will have to be inferred from the features by the algorithm.

Here’s the implementation of our __getitem__

# src/dataset.py

# ... continued from the example above.

# Adjust the indentation accordingly when copying and pasting!

def __getitem__(self, idx):

# We need to cast Path instance to str

# as cv2.imread is waiting for a string file path

item = {"paths": str(self.paths[idx])}

img = cv2.imread(item["paths"])

if self.transforms is not None:

img = self.transforms(image=img)["image"]

img = np.moveaxis(img, -1, 0)

item["features"] = torch.from_numpy(img)

if self.mode != "infer":

# We need to provide a numerical index of a class, not string,

# so we cast it to int

item["targets"] = int(item["paths"].split("/")[-2])

return itemNow compare your dataset.py with our example implementation to make sure nothing is missed. Time to move to the next step!

Step 2: Model

Another advantage of Catalyst is that it does not require you to unlearn concepts you have already mastered: it’s a glue that holds familiar blocks together. Image classification is a task at which convolutional neural networks shine, so we are going to code a fairly standard CNN using PyTorch’s nn.Module.

I will not be getting into details of how CNNs work, so readers who are just starting on a deep learning path are welcome to dig into resources that I listed in my “Learning how to learn Deep Learning” article.

Let’s create a model.py file in our src folder…

touch src/model.py…and open it in our editor. Let’s start with the imports:

# src/model.py

from torch import nn

import torch.nn.functional as F

class MNISTNet(nn.Module):

# Implementation to followAs you can see, we haven’t mentioned any of Catalyst’s classes neither in a Dataset class nor in our model. It’s perfect for “catalyzing” your existing deep learning code. And later, when we will use our model in production, we will not import Catalyst at all, to save ourselves some precious space.

Our model is just a subclass of torch.nn.Module, we have to override just a couple of methods for our implementation: a constructor, where we are going to describe our model’s layers, and a forward method where we take the input, put it through our layers one by one, and return an output. Our model will have two 2-dimensional convolutional layers, and two fully-connected linear layers. We are using nn.Conv2d and nn.linear classes from PyTorch to initialize layers.

# scr/model.py

# ... continued from the example above.

def __init__(self, num_classes):

super().__init__()

self.conv1 = nn.Conv2d(3, 20, kernel_size=5, stride=1)

self.conv2 = nn.Conv2d(20, 50, kernel_size=5, stride=1)

self.fc1 = nn.Linear(4 * 4 * 50, 500)

self.fc2 = nn.Linear(500, num_classes)Besides connecting together layers desribed in __init__, we are also adding a non-linear activating function F.relu and a pooling function F.max_pool2d that both come with PyTorch. Here’s the result:

# scr/model.py

# ... continued from the example above.

def forward(self, x):

x = F.relu(self.conv1(x))

x = F.max_pool2d(x, 2, 2)

x = F.relu(self.conv2(x))

x = F.max_pool2d(x, 2, 2)

x = x.view(-1, 4 * 4 * 50)

x = F.relu(self.fc1(x))

x = self.fc2(x)

return xCheck your model code against our repo and let’s move to step three!

Step 3: Experiment

Now that we have our dataset and our model set in code—we can finally start cooking with gas Catalyst! In Catalyst’s terms, the experiment is where our models and datasets come together; you can think of it as of a Controller in MVC pattern. A Catalyst experiment is a way to abstract out the training loop and rely on callbacks (a lot of them come out of the box with the framework) for common operations: like measuring accuracy and applying optimizations. The state of your experiment is automatically relayed between training steps so you can focus on fine-tuning the performance of your model instead of messing with loops in code.

There are more than a few base classes for experiments in Catalyst, but today we will deal only with the ConfigExperiment: it gives us access to default callbacks to execute code on different stages of the training cycle, and allows us to feed all the training parameters as a configuration YAML file (hello, Kubernetes).

Let’s create the src/experiment.py file and start putting in its contents:

# src/experiment.py

import random

from pathlib import Path

import albumentations as A

from collections import OrderedDict

from catalyst.dl import ConfigExperiment

from .dataset import MNISTDataset

class Experiment(ConfigExperiment):

# Implementation to followHere, we will have to deal with two static methods: get_transforms and get_datasets. The first one, get_transforms, will tell our experiment which transformations need to be applied to dataset items. For simplicity’s sake, we will only use the Normalize transform from the albumentations package: it normalizes image pixel values from integers between 0 and 254 to floats around zero.

# src/experiment.py

@staticmethod

def get_transforms():

return A.Normalize()The code for get_datasets is much more interesting, so I will put it here in full and then decsribe the steps taken:

# src/experiment.py

def get_datasets(self, stage: str, **kwargs):

datasets = OrderedDict()

data_params = self.stages_config[stage]["data_params"]

if stage != "infer":

train_path = Path(data_params["train_dir"])

imgs = list(train_path.glob('**/*.png'))

split_on = int(len(imgs) * data_params["valid_size"])

random.shuffle(imgs)

train_imgs, valid_imgs = imgs[split_on:], imgs[:split_on]

datasets["train"] = MNISTDataset(paths=train_imgs,

mode="train",

transforms=self.get_transforms())

datasets["valid"] = MNISTDataset(paths=valid_imgs,

mode="valid",

transforms=self.get_transforms())

else:

test_path = Path(data_params["test_dir"])

imgs = list(test_path.glob('**/*.png'))

datasets["infer"] = MNISTDataset(paths=imgs,

mode="infer",

transforms=self.get_transforms())

return datasetsThe first thing to notice is that data_params is a dictionary that will be populated from our configuration YAML. More on that later.

Code in the if statement decides how we are performing the Train/Test splitting. Usually, data scientists will use methods from scikit-learn arsenal for that, but to reduce the number of dependencies, I went for a hand-rolled solution. The idea is to shuffle contents of a given folder with sample images up, and take the first valid_size * 100% (valid_size will also come from YAML) items from a list as a training dataset, and the rest as the validation dataset.

In case we are using a dataset for our inference stage—we will just take all the pictures from a given set. Spoiler: we will use the contents of our data/train directory for train and valid, and contents of data/test for infer.

That’s all the code for src/experiment.py! Be sure to cross-check with the source repo.

Just a couple of final touches before we are ready to define our YAML config and run the training: first, we would need to create an __init__.py file in our src folder that will tie the pieces together.

# src/__init__.py

from catalyst.dl import SupervisedRunner as Runner

from .experiment import Experiment

from .model import MNISTNet

from catalyst.dl import registry

registry.Model(MNISTNet)And, finally, let’s add .gitignore to the project root and throw this inside:

# .gitignore

data/

__pycache__/

logs/We will use git later in the tutorial to deploy our production model to a dyno on Heroku, and we don’t want to accidentally send the whole dataset, as well as the files generated by Catalyst, into the cloud.

Now we can run git init in the terminal while in the root of a project to start a local repo.

Step 4: Training configuration

Let’s get back to our terminals and create a configs directory next to our src.

mkdir configs && touch configs/train.ymlNow let’s define the configuration file for our training stage. The full list of available settings can be found in the catalyst-team/catalyst repo on GitHub. We will not use all of them in this tutorial, just the most important ones.

# config/train.yml

model_params:

model: MNISTNet

num_classes: 10

args:

expdir: src

logdir: logs

verbose: True

# ...TBC...The first key in YAML is used to pass arguments to the model’s initializer. As our MNISTNet class needs a num_classes as a single argument—we provide it here. More arguments—more keys.

The second key allows us to set flags that will be fed to catalyst-dl run CLI executable: where the logs and checkpoints for trained models will be exported, where the __pycache__ files will be generated (that is why we added them to .gitignore earlier), and control the verbosity of logging.

The most interesting (and powerful) section of our YAML is stages. Here’s the code in full:

# config/train.yml

# ...continued from above

stages:

data_params:

batch_size: 64

num_workers: 0

train_dir: "./data/train"

valid_size: 0.2

loaders_params:

valid:

batch_size: 128

state_params:

num_epochs: 3

main_metric: accuracy01

minimize_metric: False

criterion_params:

criterion: CrossEntropyLoss

optimizer_params:

optimizer: Adam

callbacks_params:

accuracy:

callback: AccuracyCallback

accuracy_args: [1, 3]

stage1: {}Let’s take time to understand each key under stages in some details:

data_params

data_params:

batch_size: 64

num_workers: 0

train_dir: "./data/train"

valid_size: 0.2

loaders_params:

valid:

batch_size: 128-

The

num_workerskey has to do with the number of parallel processes for PyTorch’s DataLoader that Catalyst will utilize to batch-load data from Dataset we defined earlier. There is no default value for this key, so if you don’t want your experiment to fail with an error, you have to setnum_workersto at least0(meaning, only the main process will be used). -

We don’t want to leave

batch_sizeunattended either, as the default batch size for PyTorch’sDataLoaderis 1, and it means we will only feed images from dataset to algorithm one at a time. That is not what we want, especially with a small number of features our 28 by 28 PNGs possess. Let’s set the batch size for our experiment to 64. -

We are using values of

train_dirandvalid_sizeinside the code for ourExperimentto determine where to get images from and how to split them. -

If we want, we can change the batch size for a particular loader inside the optional

loader_paramsYAML key. In our example, we will increase the batch size for the loader responsible for loading the “valid” dataset to 128. That is yet another example of convention-over-configuration in Catalyst: we don’t have to write any of the loaders ourselves, Catalyst uses native PyTorch classes and applies settings based on the key names we chose for ourdatasetsordered dictionary insidesrc/experiment.py.

state_params

state_params:

num_epochs: 3

main_metric: accuracy01

minimize_metric: Falsenum_epochs—number of epochs to run in all the stages. Three is a lucky number.main_metric—by default, Catalyst will use “loss” as the main metric during the training stage to elect the best performing combination of model weights (called a checkpoint). To show that we can use a different metric if needed, here we replace loss for “Top-1 accuracy”. It’s about how many times our model’s top guess for a label was on point. If out of four given images, we identify a T-shirt as “T-shirt,” a sneaker as “Sneaker,” a coat as “Coat,” and a bag as “Sandal”—our Top-1 accuracy is 3 out of 4, or 75%. Obviously, we want this number to be as high as possible.minimize_metric—as the default metric is “loss,” Catalyst will try to adjust model weights till it minimizes the loss. In our case, we want exactly the opposite, so we set this parameter to “False.”

Metrics in Catalyst are implemented as MetricCallback or MultiMetricCallback objects that both inherit from Callback—a generic base class for doing something with our experiment’s state on each hook in a lifecycle (more on that when we will talk about our Inference step). Unfortunately, no clear documentation on Metrics and Callbacks is yet available, so the only way to gain insights is to look at the framework’s source code.

callback_params

callbacks_params:

accuracy:

callback: AccuracyCallback

accuracy_args: [1, 3]The value accuracy01 inside the main_metric key under state_params is a special notation that tells Catalyst to use the built-in AccuracyCallback and set it to Top-1 guesses. If we also want to examine the Top-3 or Top-5 accuracies (how many times the correct label was mentioned in the top three or top five guesses for each image), we can do it with some special syntax under callback_params. Note that it does not impact the way the best model (checkpoint) is picked; it exists for the visual feedback only.

criterion_params and optimizer_params

criterion_params:

criterion: CrossEntropyLoss

optimizer_params:

optimizer: AdamCriteria are crucial for training a neural network. Given an input and a target, they compute a gradient according to a given loss function so the model weights can be adjusted accordingly. Here we are just using a fairly standard CrossEntropyLoss criterion from PyTorch, as all of PyTorch’s built-in criteria are also accessible in Catalyst out of the box.

The same goes for optimizers, which also rely on PyTorch’s built-in optimization algorithms. Here, we are using the Adam algorithm for Stochastic Optimization, as defined in torch.optim package.

stage1

stage1: {}Anything that’s not a keyword in Catalyst config is considered a stage name. For training, at least one stage name is required. Any of the parameters described above can be overridden per stage.

As we have only one stage, we don’t need to override anything, and we leave this key empty.

Step 5: Training the model

Let’s make sure our training config looks solid, and now we can finally train the beast!

Triumphantly, open your terminal and run this command:

catalyst-dl run --config=config/train.ymlYou should see something close to this:

alchemy not available, to install alchemy, run `pip install alchemy-catalyst`.

1/3 * Epoch (train): 100% 750/750 [01:01<00:00, 12.18it/s, accuracy01=89.062, accuracy03=96.875, loss=0.346]

1/3 * Epoch (valid): 100% 94/94 [00:09<00:00, 10.14it/s, accuracy01=87.500, accuracy03=97.917, loss=0.339]

[2020-02-24 18:11:43,433]

1/3 * Epoch 1 (train): _base/lr=0.0010 | _base/momentum=0.9000 | _timers/_fps=1237.6417 | _timers/batch_time=0.0536 | _timers/data_time=0.0377 | _timers/model_time=0.0158 | accuracy01=83.2667 | accuracy03=97.8875 | loss=0.4576

1/3 * Epoch 1 (valid): _base/lr=0.0010 | _base/momentum=0.9000 | _timers/_fps=1325.1064 | _timers/batch_time=0.0972 | _timers/data_time=0.0671 | _timers/model_time=0.0301 | accuracy01=88.6553 | accuracy03=99.0304 | loss=0.3138Never mind the warning, alchemy is a tool by the Catalyst team to improve experiment logging and visualization, but we will leave out of our tutorial for this time.

We have separate metrics for our train and valid subsets of images.

accuracy01=89.062, accuracy03=96.875, loss=0.346 are our key metrics. Note that these are the results for the last batch only. After the timestamp, we see more parameters that depict state for the whole epoch as it passed.

After all the epochs have passed, here are the final results:

Top best models:

logs/checkpoints/stage1.3.pth 90.2122And we have a winner!

The best checkpoint across all epochs had a Top-1 accuracy of over 90%.

Not the best result achieved on Fashion-MNIST, but not the worst either!

Step 6: Inference

From here, we can see the finish line. As we have successfully trained and validated our model and selected the checkpoint with the best-performing weights, it is time to take the training wheels off and predict some trousers labels on our test data.

We are going to do it Kaggle-style. In a typical Kaggle competition on image classification, contestants are asked to submit a CSV file where each line stands for each entity in a test set, and the way it was categorized.

To produce such a CSV, we are going to code a custom callback for Catalyst that will replace a built-in InferCallback.

Let’s create a callbacks subfolder inside our src and put an infer_callback.py file inside.

mkdir src/callbacks

touch src/callbacks/infer_callback.pyAs usual, we start with some imports:

# src/callbacks/infer_callback.py

from catalyst.dl import registry, Callback, CallbackOrder, State

@registry.Callback

class MNISTInferCallback(Callback):

def __init__(self, subm_file):

super().__init__(CallbackOrder.Internal)

self.subm_file = subm_file

self.preds = []We need a registry module so we can make our callback known to Catalyst’s registry—this way, we’ll be able to use it from our configuration YAML. CallbackOrder is the enum of available callback order values. We are initializing our callback with CallbackOrder.Internal, which is the value used for Catalyst’s own InferCallback.

Callback is an abstract class that has the knowledge of all the events inside the stage cycle and stubbed handlers to implement for each event. Here’s the order of events inside the cycle.

-- stage start

---- epoch start (one epoch - one run of every loader)

------ loader start

-------- batch start

-------- batch handler

-------- batch end

------ loader end

---- epoch end

-- stage endState is the Catalyst class that holds inputs and outputs of our model during the experiment. state.input is passed to model.forward method, state.output is what

model.forward(state.inputs) returns.

Now, let’s define the on_batch_end handler for our callback:

# src/callbacks/infer_callback.py

# ... continued from the example above

def on_batch_end(self, state: State):

paths = state.input["paths"]

preds = state.output["logits"].detach().cpu().numpy()

preds = preds.argmax(axis=1)

for path, pred in zip(paths, preds):

self.preds.append((path, pred))state.output in our case are predictions of our model in form of logits. This is a way to store probability values for each image class on every guess. The highest values is our top guess.

Under key state.output["logits"] we will find a PyTorch Tensor with values. We need to safely extract the tensor and convert it to NumPy’s ndarray. Then we can get rid of lower probabilities and keep only the best guesses as integers with preds.argmax(axis=1).

We are also using a value from state.input["paths"] (remember, we attaching that information in a __getitem__ method in our dataset.py)

Now we just need to write our predictions to a file. For that, we will use the on_loader_end handler to dump the contents of our self.preds list after all batches have finished processing:

# src/callbacks/infer_callback.py

# ... continued from the example above

def on_loader_end(self, _):

subm = ["path,class_id"]

subm += [f"{path},{cls}" for path, cls in self.preds]

with open(self.subm_file, 'w') as file:

file.write("\n".join(subm))Our callback is ready! Now, to make Catalyst aware of it, we need to add a single line to our src/__init__.py:

# Rest of the file above

from .callbacks.infer_callback import MNISTInferCallbackNow, let’s create the config/infer.yaml—technically, it is a stage, but it is quite different from our train stage definition, plus we don’t want to run our train and infer stages together. The best practice is to put our infer stage in a separate config file:

# config/infer.yaml

model_params:

model: MNISTNet

num_classes: 10

args:

expdir: src

logdir: logs

verbose: True

stages:

data_params:

batch_size: 64

num_workers: 0

test_dir: "./data/test"

callbacks_params:

loader:

callback: CheckpointCallback

resume: './logs/checkpoints/best.pth'

infer:

callback: MNISTInferCallback

subm_file: "./logs/preds.csv"

infer: {}Besides using the different set of images for inference step (the test set of 10,000 PNGs), the main magic is happening inside callback_params: we use our callback for inference step, and we start the loader with the built-in CheckpointCallback that allows us to resume from any checkpoint of our model. We’ll be using the one with the best weights that we found at the training step.

Note that we have to name our step precisely infer, so Catalyst can work its magic and properly evaluate the model.

Finally, let’s run the inference from the terminal!

catalyst-dl run --config=config/infer.ymlThis step will take much less time than training, and you will notice the preds.csv file being created inside our logs/ folder. Here’s how it would look like:

head ./logs/preds.csv

path,class_id

data/test/9/348.png,9

data/test/9/1804.png,9

data/test/9/3791.png,9

data/test/9/1838.png,9

data/test/9/7285.png,9

data/test/9/3785.png,9

data/test/9/6825.png,9

data/test/9/7508.png,9

data/test/9/2316.png,9If you dig into the resulting CSV file further, you will see that the prediction confuses a label roughly 1/10th of the time. That corresponds to the ~90% accuracy of our model

Step 7: Production

Now that we have evaluated our model on a test dataset—it is time to deploy it to the web! We will be using Heroku for hosting: it will allow us to deploy our application with a single git push.

Heroku might not be the best platform for going big-time due to disk space limitations and a lack of GPUs, but nothing beats its ease of deployment.

What we are building

We want to deploy a simple HTTP API with a single GET endpoint that will take the URL of any image on the web as a query string and return the JSON with a prediction.

The resulting service will work like this:

curl https://fashion-mnist.herokuapp.com/predict?url=https://i5.walmartimages.ca/images/Large/_83/326/83326.jpg

{"predict":"Trouser"}We will use the Predictor class of our design that will use the best checkpoint from our training step and will not require to set up a Catalyst framework on a server.

To implement the HTTP API, we will use the FastAPI framework.

Predictor

Let’s create another directory in the root of our project: we will use it to keep our production logic.

mkdir src/predictor

touch scr/predictor/predictor.pyNow let’s define our Predictor class:

# src/predictor/predictor.py

from urllib.request import urlopen

import albumentations as A

import cv2

import numpy as np

import torch

from ..dataset import CLASSES

from ..model import MNISTNet

class Predictor():

def __init__(self, checkpoint, use_gpu=False):

assert not use_gpu, "We're not using gpu predictor in this tutorial"

self.model = MNISTNet(num_classes=len(CLASSES))

state_dict = torch.load(checkpoint, map_location="cpu")

self.model.load_state_dict(state_dict["model_state_dict"])

self.model.eval()In the constructor, we initialize our model and load provided checkpoint as its initial state. For this

tutorial we’ll be using CPU-only version of this code, but it is entirely possible to use model on a GPU if it is available on a hosting machine (for Heroku this is not the case).

Now let’s define two static helper methods to download an image from a URL, resize it, and feed to our model:

# src/predictor/predictor.py

# ... continued from the example above

@staticmethod

def _prepare_img(url):

req = urlopen(url)

arr = np.asarray(bytearray(req.read()), dtype=np.uint8)

img = cv2.imdecode(arr, -1)

img = cv2.resize(img, (28, 28)) - 255

img = A.Normalize()(image=img)["image"]

return img

@staticmethod

def _prepare_batch(img):

img = np.moveaxis(img, -1, 0)

vec = torch.from_numpy(img)

batch = torch.unsqueeze(vec, 0)

return batchFor our simple case, let’s assume the following: one request—one image—one batch—one prediction.

Finally, the predict method that returns a predicted label converted to a human-readable string as per CLASSES constant inside our src/dataset.py file:

def predict(self, url):

img = self._prepare_img(url)

batch = self._prepare_batch(img)

out = self.model.forward(batch)

out = out.detach().cpu().numpy()

return CLASSES[np.argmax(out)]Server

Let’s create the src/predictor/server.py and put the simplest possible code inside:

# src/predictor/server.py

from fastapi import FastAPI

from .predictor import Predictor

app = FastAPI()

predictor = Predictor("./models/best.pth")

@app.get("/")

def home():

return "Try to use /predict?url=url_to_image " \

"with url_to_image you want to classify"

@app.get("/predict")

def predict(url: str):

return {"predict": predictor.predict(url)}Deployment

First, make sure you’ve got a Heroku account: it takes a couple of minutes, and you don’t need to provide any payment details upfront. We will also be using only the free Heroku plan for this tutorial.

Second, download and install the Heroku CLI for your platform.

Now we would need to create three files to prepare ourselves for a push to production: Procfile that tells Heroku which process to run for a web server, requirements.txt for the production setup of Python libraries we use, and Aptfile that lists binary dependencies for opencv-python.

You can create all three files in the root of your project at the same time and then just cut and paste respective content:

touch Procfile Aptfile requirements.txt# Procfile

web: uvicorn src.predictor.server:app --port $PORT --host 0.0.0.0

# Aptfile

libsm6

libxrender1

libfontconfig1

libice6

# requirements.txt

opencv-python>=4.0

numpy>=1.17.4

https://download.pytorch.org/whl/cpu/torch-1.3.1%2Bcpu-cp36-cp36m-linux_x86_64.whl

albumentations==0.4.3

fastapi>=0.43.0

uvicorn>=0.10.8Note that we are not adding Catalyst as a production dependency—first of all, we don’t need it, as we have already trained our model locally. Second of all, Heroku imposes a 500MB limitation on application slug (code + dependencies). For the same reason, we are installing PyTorch from a wheel, as a pre-compiled binary.

Finally, we are going to extract the best of our model’s checkpoints from the default logs/checkpoints and put it into a separate /models folder. It is usually not the best practice to commit a trained model into the code repository, but as our Heroku deploy is done entirely through git—we don’t have a choice.

mkdir ./models

cp ./logs/checkpoints/best.pth ./models/As a final touch, we need to modify our src/__init__.py with a try-except block so we won’t try to load Catalyst in production:

# src/__init__.py

try:

from catalyst.dl import SupervisedRunner as Runner

from .experiment import Experiment

from .model import MNISTNet

from .callbacks.infer_callback import MNISTInferCallback

from catalyst.dl import registry

registry.Model(MNISTNet)

except ImportError:

print("Catalyst not found. Loading production environment")Now we need to make sure we add and commit all our files to git, and the git status command says that we’re clean. Finally, we are ready to deploy!

Follow these steps from the terminal:

heroku create <YOUR_APP_NAMEE> --buildpack heroku/python

heroku buildpacks:add --index 1 heroku-community/apt

git push heroku masterIf you can’t come up with a name for your application—not a big deal, just leave it blank, and Heroku will generate one for you. Wait for a couple of minutes for the application to build and note down the Heroku URL at the end of the output. Now you can send a GET request to the /predict API endpoint, provide a URL with the image of the clothing item, and get your prediction!

Congrats, you made it through our tutorial and know you have some practical knowledge about the Catalyst framework and have seen its potential for creating reproducible, production-ready deep learning pipelines! If you are just starting your dive into deep learning—I hope my previous article can also be of help.

Check out the awesome-catalyst-list repository on GitHub for more useful pointers on Catalyst.

We are also working on the second chapter of this tutorial, where we will keep building on top of a current example to introduce fine-tuning of pre-trained models, metric analysis, hyperparameter search, pipeline automation with directer acyclic graphs, data version control, distributed serving, and more.

Stay tuned and feel free to ping us if you want to talk about your deep learning needs and how we can help your team to achieve the best results.