Kubing Rails: stressless Kubernetes deployments with Kuby

Much as the ancient Greeks struggled with squaring the circle, so too do modern web developers struggle to find a convenient way to deploy their apps on Kubernetes. YAML and Helm (and the compass and straightedge, for that matter) are all tried-and-true tools, but using these alone might require too many steps to accomplish the task. Some developers are okay with this! Rubyists like us, on the other hand, prefer to concentrate on the creative side of programming, and we’re always looking for ways to minimize the routine. So today, in honor of this impulse, I’d like to discuss the latest attempt to de-stressify deploying Rails apps on Kubernetes: Kuby!

For years, the Rails community has been clamoring for Active Deployment, a magical out-of-the-box mechanism for deploying applications. I’m not even sure that it’s actually possible to build a framework that would abstract all the different ways to deploy applications, from bare metal to containers. But what about a particular sub-class of deployment targets? While Capistrano still works well for real servers, the world continues to move towards containerized environments, and Kubernetes in particular. Does the Ruby world have a first-class solution to deal with YAMLs and Pods? This is the question that led me to take a closer look at Kuby, which had recently popped up on my radar.

Kuby claims to make Kubernetes accessible to everyone (not only DevOps gurus) by relying on the good ol’ convention-over-configuration principle. As an engineer who tries to avoid dealing with k8s, I found myself being lured in by this promise, and I decided to give it a try (and to give Kubernetes a second chance).

So, let’s jump right in: how can we deploy a Rails app with Kuby?

Kuby’s Quick Start guide is the best starting point, and since it’s so effective, there’s no need to reproduce it here. Let me just note the essential points:

- Choose a Kubernetes provider (I went with Digital Ocean).

- Install Docker and Kuby, then run rails g kuby.

…and that’s it! With that, in theory, you’re ready to deploy your application right away. In practice, however, every Rails app is a unique piece of software, and there’s a high chance that the default configuration won’t cut it. That was exactly my situation when dealing with the AnyCable Rails demo. I’d like to share my Kuby journey with you, and I’ll focus on the following topics:

- Docker for development vs. Kuby for deployment

- Test flying with Action Cable

- Getting real with AnyCable via kuby-anycable

- Deploying continuously with Kuby and GitHub Actions

- What about Kuby without Rails?

Docker for development vs. Kuby for deployment

The first challenge I faced was building production Docker images while keeping all the development within a Docker environment. In other words, running kuby build from within a container doesn’t work (it fails with error: No such file or directory - build) because it needs a Docker daemon running in the system. Should I try and play some kind of Docker Matryoshka Doll game, putting Docker into Docker? That’s no fun.

Instead, I tried a different approach: separating dev tools from deploy tools. I want to run Kuby from my host system (which has Docker installed), and at the same time, I don’t want to install all the application dependencies. That’s because this might require installing additional system packages, like postgresql-dev for the pg gem, for example. So, I’m going to use the Gemfile componentization technique.

First, I created a separate Gemfile with only Kuby dependencies:

# gemfiles/kuby.gemfile

# Active Support is required to read credentials.

gem "activesupport", "~> 6.1"

gem "kuby-core", "~> 0.14.0"

gem "kuby-digitalocean", "~> 0.4.3"

Now we can include it in the main Gemfile via #eval_gemfile (as we still have config/initializers/kuby.rb, which relies on Kuby):

eval_gemfile "gemfiles/kuby.gemfile"

Finally, I’m adding one more Gemfile, gemfiles/deploy.gemfile:

source 'https://rubygems.org'

git_source(:github) { |repo| "https://github.com/#{repo}.git" }

ruby '~> 3.0'

eval_gemfile "./kuby.gemfile"

# To debug configuration issues

gem 'pry-byebug'

As you can see, we’ve extended kuby.gemfile by adding debugging tools and configuring RubyGems.

Now, I can run Kuby like so:

# First, install gems locally

BUNDLE_GEMFILE=gemfiles/deploy.gemfile bundle install

# Now, run Kuby!

BUNDLE_GEMFILE=gemfiles/deploy.gemfile bundle exec kuby <some command>

Typing BUNDLE_GEMFILE=... is boring. Let’s create a separate executable, bin/kuby, which will do that for us:

#!/bin/bash

cd "$(dirname "$0")/.."

export BUNDLE_GEMFILE=./gemfiles/deploy.gemfile

bundle check > /dev/null || bundle install

kuby_env=${KUBY_ENV:-production}

bundle exec kuby -e "${kuby_env}" "$@"

We added bundle install inside this script to automate things, so no need to worry about that anymore.

Let’s give it a try:

$ bin/kuby build

error: No webserver named

🤔 Kuby is still failing, but the error is different now: no webserver named.

Looking through the source code, I found that this exception was raised when Kuby couldn’t automatically detect a web server. How does it do that? By looking at the loaded gem specs:

def default_webserver

if Gem.loaded_specs.include?("puma")

:puma

end

end

We don’t have puma in the deploy.gemfile, so Kuby couldn’t find a web server. Looking ahead, we could also face a similar problem with the Ruby version and the Gemfile path (both are inferred from those currently in use). Luckily, we can explicitly provide all the values in the Kuby configuration:

Kuby.define("anycable-rails-demo") do

environment(:production) do

# ...

docker do

base_image "ruby:3.0.1"

gemfile "./Gemfile"

# For multi-part Gemfiles, we also need to specify additional

# paths to be copied to a Docker container before `bundle install`

bundler_phase.gemfiles "./gemfiles/kuby.gemfile"

webserver_phase.webserver = :puma

# <credentials and image registry settings>

end

# ...

end

end

With a few additional lines of code, we can now successfully build our production images via kuby build. Oh, I said images? Let’s take a slight detour and see what’s hiding under the hood of the build command.
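As a quick aside before the detour, the "detect, unless told explicitly" behaviour can be sketched in plain Ruby. This is a simplified illustration rather than Kuby's actual code: `resolve_webserver` and `KNOWN_WEBSERVERS` are hypothetical names, and only the `Gem.loaded_specs` lookup is taken from the snippet shown earlier.

```ruby
# Hypothetical sketch: prefer an explicit setting, otherwise infer the
# web server from the gems loaded in the current process.
KNOWN_WEBSERVERS = %w[puma].freeze

def resolve_webserver(explicit: nil, loaded_specs: Gem.loaded_specs)
  return explicit.to_sym if explicit

  detected = KNOWN_WEBSERVERS.find { |name| loaded_specs.key?(name) }
  raise ArgumentError, "no web server detected; set one explicitly" unless detected

  detected.to_sym
end

# With the slim deploy bundle, puma is not loaded, so detection fails...
begin
  resolve_webserver(loaded_specs: {})
rescue ArgumentError => e
  puts "detection failed: #{e.message}"
end

# ...while an explicit value sidesteps the lookup entirely.
puts resolve_webserver(explicit: :puma, loaded_specs: {})
```

The same reasoning applies to the Ruby version and the Gemfile path: anything inferred from the running process is worth pinning explicitly once the deploy bundle differs from the application bundle.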

Kuby’s Rails plugin builds two images for you: one with a Rails app (running puma ... config.ru by default) and another one with NGINX and compiled assets. You can see the contents of the dockerfiles by running the dedicated command, like this:

$ bin/kuby dockerfiles

# Dockerfile for image registry.digitalocean.com/anycable/anycable-rails-demo with tags 20211119151614, latest

FROM node:12.14.1 AS nodejs

FROM ruby:3.0.1

WORKDIR /usr/src/app

ENV RAILS_ENV=production

ENV KUBY_ENV=production

ARG RAILS_MASTER_KEY

RUN apt-get update -qq && \

DEBIAN_FRONTEND=noninteractive apt-get install -qq -y --no-install-recommends apt-transport-https && \

DEBIAN_FRONTEND=noninteractive apt-get install -qq -y --no-install-recommends apt-utils && \

DEBIAN_FRONTEND=noninteractive apt-get install -qq -y --no-install-recommends \

ca-certificates

COPY --from=nodejs /usr/local/bin/node /usr/local/bin/node

RUN wget https://github.com/yarnpkg/yarn/releases/download/v1.21.1/yarn-v1.21.1.tar.gz && \

yarnv=$(basename $(ls yarn-*.tar.gz | cut -d'-' -f 2) .tar.gz) && \

tar zxvf yarn-$yarnv.tar.gz -C /opt && \

mv /opt/yarn-$yarnv /opt/yarn && \

apt-get install -qq -y --no-install-recommends gnupg && \

wget -qO- https://dl.yarnpkg.com/debian/pubkey.gpg | gpg --import && \

wget https://github.com/yarnpkg/yarn/releases/download/$yarnv/yarn-$yarnv.tar.gz.asc && \

gpg --verify yarn-$yarnv.tar.gz.asc

ENV PATH=$PATH:/opt/yarn/bin

RUN gem install bundler -v 2.2.22

COPY ./Gemfile .

COPY ./Gemfile.lock .

COPY ./gemfiles/kuby.gemfile ./gemfiles/kuby.gemfile

ENV BUNDLE_WITHOUT='development test deploy'

RUN bundle install --jobs $(nproc) --retry 3 --gemfile ./Gemfile

RUN bundle binstubs --all

ENV PATH=./bin:$PATH

COPY package.json yarn.loc[k] .npmr[c] .yarnr[c] ./

RUN yarn install

COPY ./ .

RUN bundle exec rake assets:precompile

CMD puma --workers 4 --bind tcp://0.0.0.0 --port 8080 --pidfile ./server.pid ./config.ru

EXPOSE 8080

# Dockerfile for image registry.digitalocean.com/anycable/anycable-rails-demo with tags 20211119151614-assets, latest-assets

FROM registry.digitalocean.com/anycable/anycable-rails-demo:20211119150731 AS anycable-rails-demo-20211119150731

RUN mkdir -p /usr/share/assets

RUN bundle exec rake kuby:rails_app:assets:copy

FROM registry.digitalocean.com/anycable/anycable-rails-demo:20211119151614 AS anycable-rails-demo-20211119151614

COPY --from=anycable-rails-demo-20211119150731 /usr/share/assets /usr/share/assets

RUN bundle exec rake kuby:rails_app:assets:copy

FROM nginx:1.9-alpine

COPY --from=anycable-rails-demo-20211119151614 /usr/share/assets /usr/share/nginx/assets

If you take a closer look at the -assets image, you’ll see that we’re copying files from two images: from the Rails image which was just built (anycable-rails-demo-20211119151614) and from the previous version (anycable-rails-demo-20211119150731). This way, we keep two consecutive versions of all files (with different digests) available to users (browsers), so the deployment goes smoothly, without any “404 Not Found” errors. Some developers may be unaware of this particular deployment issue, but with Kuby, we don’t have to worry about it anyway because it’s got us covered. Isn’t that cool?
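The idea of keeping two generations of fingerprinted assets side by side can be illustrated with a small standalone script. This is a sketch under assumptions, not Kuby's `AssetCopyTask`: `merge_assets` is a hypothetical helper, and the directory layout and file names are made up for the demo.

```ruby
require "fileutils"
require "tmpdir"

# Hypothetical helper: copy every file from `from` into `to`, keeping files
# that are already there (e.g. assets left over from the previous release).
def merge_assets(from:, to:)
  Dir.glob("**/*", base: from).each do |relative|
    source = File.join(from, relative)
    next unless File.file?(source)

    target = File.join(to, relative)
    next if File.exist?(target)

    FileUtils.mkdir_p(File.dirname(target))
    FileUtils.cp(source, target)
  end
end

Dir.mktmpdir do |root|
  previous = File.join(root, "previous")
  current = File.join(root, "current")
  served = File.join(root, "served")
  [previous, current, served].each { |dir| FileUtils.mkdir_p(dir) }

  # Fingerprinted file names differ between releases.
  File.write(File.join(previous, "application-aaa111.js"), "old build")
  File.write(File.join(current, "application-bbb222.js"), "new build")

  merge_assets(from: previous, to: served)
  merge_assets(from: current, to: served)

  # Both generations are now available to browsers.
  puts Dir.children(served).sort
end
```

A browser that loaded a page just before the deployment can still fetch the old file name, while fresh page loads get the new one.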

Here’s another line in the second Dockerfile which caught my attention:

RUN bundle exec rake kuby:rails_app:assets:copy

This Rake task is the only reason why we need to add Kuby gems and the initializer to the application. Perhaps we can avoid this and cut the bundle’s size? 🤔

Sure we can! Let’s copy the AssetCopyTask class from Kuby and define our own Rake task:

# lib/tasks/kuby/assets.rb

class KubyAssetCopyTask

# class contents from kuby-core

#

# The only thing we need to change here is

# replace `Kuby.logger.info` with `$stdout.puts`

end

namespace :kuby do

namespace :rails_app do

namespace :assets do

task :copy do

KubyAssetCopyTask.new(

from: "./public", to: "/usr/share/assets"

).run

end

end

end

end

Wondering just how many megabytes we saved with this operation? Check this:

registry.digitalocean.com/anycable/anycable-rails-demo latest 977712dcef08 3 minutes ago 1.32GB

registry.digitalocean.com/anycable/anycable-rails-demo 20211117173245 b85f23c54131 2 days ago 1.53GB

More than 200MB was saved! 🙀 One reason for this is that kuby-core depends on the helm-rb and kubectl-rb gems, which contain their corresponding binaries in the distributions. This is a pattern I wish didn’t exist in the Ruby community, but, alas.

Despite this, the image is still pretty large. Kuby supports building Alpine images as well, so if you’re feeling gutsy, feel free to set out on that journey fraught with missing dependencies and the need for other hacks. I did this for the AnyCable Demo app (see the PR) and reduced the image size down to 987MB. Frontend artifacts occupy about a third of this space: node_modules/, Yarn caches, and precompiled assets. When we manually craft Rails dockerfiles, we usually use multi-stage builds to produce all kinds of assets. We only include the necessary files in the final one. Kuby doesn’t do that. At least, not yet 😉

So, we can build and push our application Docker image to the registry. Now it’s time to deploy it!

Test flying with Action Cable

I decided to start off my Kubernetes journey with a simple application using Rails and Action Cable (instead of AnyCable).

Besides the app itself, we’ll also need a database (PostgreSQL), and a Redis instance for Action Cable broadcasting. I decided to go with a managed Postgres database provided by Digital Ocean (yeah, I’m hesitant to deploy a database that’s the heart of an application into a containerized environment). And what about Redis? Our use case here doesn’t require any persistence because we only use PUB/SUB features. So, spinning up a Redis container should work well for us.

I came across the kuby-redis gem, which sounded like exactly what I was looking for. We just need to add this line of code to our configuration:

add_plugin(:redis) { instance(:cable) }

I had expected some magic here, but unfortunately, that didn’t exactly pan out. First, I found that we also need to install KubeDB. Of course, there is kuby-kubedb for that! The problem is that it only supports the v1alpha1 API spec and isn’t compatible with recent versions of KubeDB. Older versions of KubeDB, in turn, aren’t compatible with the modern Kubernetes API. ⛔️ A dead end.

So, we had to fall back to a managed Redis instance from DO. Here’s the final Kuby configuration for this step:

# We need to require some Rails stuff to read encrypted credentials

require "active_support/core_ext/hash/indifferent_access"

require "active_support/encrypted_configuration"

require "kuby"

require "kuby/digitalocean"

Kuby.define("anycable-rails-demo") do

environment(:production) do

app_creds = ActiveSupport::EncryptedConfiguration.new(

config_path: "./config/credentials/production.yml.enc",

key_path: "./config/credentials/production.key",

env_key: "RAILS_MASTER_KEY"

)

docker do

base_image "ruby:3.0.1"

gemfile "./Gemfile"

webserver_phase.webserver = :puma

credentials do

username app_creds[:do_token]

password app_creds[:do_token]

end

image_url "registry.digitalocean.com/anycable/anycable-rails-demo"

end

kubernetes do

add_plugin :rails_app do

hostname "kuby-demo.anycable.io"

manage_database false

env do

data do

add "RAILS_LOG_TO_STDOUT", "enabled"

add "DATABASE_URL", app_creds[:database_url]

add "REDIS_URL", app_creds[:redis_url]

add "ACTION_CABLE_ADAPTER", "redis"

end

end

end

provider :digitalocean do

access_token app_creds[:do_token]

cluster_id app_creds[:do_cluster_id]

end

end

end

end

Starting from the top, we need to run kuby setup: this adds some system-level resources to our cluster (Ingress, CertManager, and so on). After that, we can deploy (and redeploy, or reconfigure) our production application with a single command:

Deploying an Action Cable application with Kuby

It seems our deployment got stuck at the “Still waiting” (For this world to stop hating?) state. What happened? If you wait a bit more, you’ll see an error message:

[FATAL][2021-11-22 20:08:20 +0300] ActionController::RoutingError (No route matches [GET] "/healthz"):

We didn’t pass the health check. So, where did "/healthz" come from, and why wasn’t it found? Remember how we dropped all the Kuby gems from the production bundle? It turns out that (surprise, surprise) Kuby also adds a health-checking middleware. So, let’s bring it back! For that, we can use the rack-health gem and add a single line of code in our config.ru:

require_relative "config/environment"

use Rack::Health, path: "/healthz"

run Rails.application

After adding this change and rebuilding the image, we’re finally able to successfully deploy our application!
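For readers curious what such a health-check middleware boils down to, here is a minimal sketch in plain Ruby. It is not the rack-health implementation (nor Kuby's own middleware); `HealthCheck` is a hypothetical class that only relies on the Rack convention of an object responding to `call(env)` and returning a status, headers, and body.

```ruby
# Hypothetical stand-in for a health-check middleware: it answers the probe
# path itself and passes every other request down the stack.
class HealthCheck
  def initialize(app, path: "/healthz")
    @app = app
    @path = path
  end

  def call(env)
    if env["PATH_INFO"] == @path
      [200, { "content-type" => "text/plain" }, ["ok"]]
    else
      @app.call(env)
    end
  end
end

# Exercise it without a server: a Rack app is anything that responds to `call`.
fallback = ->(_env) { [404, { "content-type" => "text/plain" }, ["not found"]] }
stack = HealthCheck.new(fallback)

probe_status, = stack.call({ "PATH_INFO" => "/healthz" })
other_status, = stack.call({ "PATH_INFO" => "/missing" })
puts "probe: #{probe_status}, other: #{other_status}"
```

Because the check is answered before the request reaches the Rails router, no route for "/healthz" needs to exist in the application itself.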

To access the app using the hostname we provided (kuby-demo.anycable.io), we must configure our DNS records. To do that, we need to obtain the public IP address of our cluster’s Ingress. Let’s use kubectl (via kuby) for this:

$ bin/kuby kubectl -N -- get ing

NAME CLASS HOSTS ADDRESS PORTS

anycable-rails-demo-ingress <none> kuby-demo.anycable.io 164.90.241.97 80, 443

After adding a DNS A record pointing to 164.90.241.97, we can finally access our demo app, which is deployed to Kubernetes at kuby-demo.anycable.io. 🎉 Yeah, we did it!

Getting real with AnyCable via kuby-anycable

Thus far, we’ve only managed to deploy a pure Rails application with no additional services (only databases). Still, our goal is to deploy a full-featured AnyCable app. So, what should we do next?

AnyCable requires two additional services to be deployed along with the application: a gRPC server (with the Rails application inside) and a WebSocket server (anycable-go). From the Kubernetes point of view, we need Services, Deployments, Config Maps, and so on. Sounds like a lot of stuff to write by hand, doesn’t it?

Luckily, Kuby provides an API to build custom plugins and inject them into the build and deploy lifecycles. We can hide all the necessary resources inside a plugin. This means users don’t need to worry about them, plus we can provide a canonical way to deploy AnyCable to Kubernetes.

Actually, this is the perfect time for us to introduce our new kuby-anycable plugin! Switching from Action Cable to AnyCable with it is as simple as this:

# kuby.rb

Kuby.define("anycable-rails-demo") do

environment(:production) do

#...

kubernetes do

# ...

add_plugin :anycable_rpc

add_plugin :anycable_go

end

end

end

Using kuby-anycable simplifies deploying an AnyCable Rails application down to just two lines of code!

And that’s it! Only two lines of code are required for deployment using the default configuration. Thanks to Kuby and Ruby, we can automatically infer all the required configuration parameters. Let’s take a look at some snippets from the plugin to demonstrate this.

Inferring configuration

The AnyCable RPC server is the same Rails app, only it’s attached to a gRPC server. Thus, we need to keep its configuration in sync with the web server. This could be achieved by introspecting the Kuby resources and creating an EnvFrom declaration for the :rpc container. For that, we use the #after_configuration hook:

def after_configuration

return unless rails_spec

deployment.spec.template.spec.container(:rpc).merge!(

rails_spec.deployment.spec.template.spec.container(:web), fields: [:env_from]

)

end

AnyCable-Go needs to know where to find the RPC service and how to connect to a Redis instance. No problem! We can find this information, too:

def after_configuration

configure_rpc_host

configure_redis_url

# ...

end

def configure_rpc_host

return if config_map.data.get("ANYCABLE_RPC_HOST")

config_map.data.add("ANYCABLE_RPC_HOST", "dns:///#{rpc_spec.service.metadata.name}:50051")

end

def configure_redis_url

return if config_map.data.get("REDIS_URL") || config_map.data.get("ANYCABLE_REDIS_URL")

# Try to lookup Redis URL from the RPC and Web app specs

[rpc_spec, rails_spec].compact.detect do |spec|

%w[ANYCABLE_REDIS_URL REDIS_URL].detect do |env_key|

url = spec.config_map.data.get(env_key)

next unless url

config_map.data.add("ANYCABLE_REDIS_URL", url)

true

end

end

end

Smart concurrency settings

Currently, AnyCable requires setting up concurrency limits on both ends: for the RPC server, we define the worker pool size (the number of threads to handle requests); for AnyCable-Go, we define the concurrency limit (the max number of simultaneous calls). We want to keep them in sync to prevent timeouts and retries. When we have one RPC server and one Go server for the default scenario, the default settings work perfectly. But what if I want to scale them independently and allow all Go apps to communicate with all RPC apps (via load balancing)? We need to carefully calculate the limit for AnyCable-Go to avoid overloading (or underloading) RPCs.
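To make the arithmetic concrete, here is a small worked sketch. The helper name `go_concurrency` and the sample numbers are hypothetical; the idea is simply to split the total RPC capacity across the AnyCable-Go replicas, with the 0.95 safety factor being the one the plugin itself applies.

```ruby
# Hypothetical helper: total RPC threads, reduced by a safety margin,
# divided evenly among the AnyCable-Go replicas.
def go_concurrency(rpc_pool_size:, rpc_replicas:, go_replicas:, margin: 0.95)
  ((rpc_pool_size * rpc_replicas) * margin / go_replicas).round
end

# 2 RPC pods with 30 threads each, serving 3 AnyCable-Go pods.
puts go_concurrency(rpc_pool_size: 30, rpc_replicas: 2, go_replicas: 3)

# 3 RPC pods with 40 threads each, serving 2 AnyCable-Go pods.
puts go_concurrency(rpc_pool_size: 40, rpc_replicas: 3, go_replicas: 2)
```

In the first case, 60 RPC threads are shared by three Go pods, so each pod may keep roughly 19 calls in flight; scaling either side changes the per-pod limit, which is why hard-coding it tends to drift out of sync.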

With kuby-anycable, we do all the math for you:

def configure_concurrency

return if config_map.data.get("ANYCABLE_RPC_CONCURRENCY")

return unless rpc_spec

rpc_pool_size = rpc_spec.config_map.data.get("ANYCABLE_RPC_POOL_SIZE")

return unless rpc_pool_size

rpc_replicas = rpc_spec.replicas

concurrency = ((rpc_pool_size.to_i * rpc_replicas) * 0.95 / replicas).round

config_map.data.add("ANYCABLE_RPC_CONCURRENCY", concurrency.to_s)

end

Alpine vs. gRPC

AnyCable users who have decided to slim down their Docker images as much as possible usually come across an issue with installing the grpc and google-protobuf gems. To successfully do this, first of all, you’ll need some additional system packages. Secondly, you’ll need to build the C extensions for these gems from the source.

We’ve decided to make it transparent for developers to migrate from Debian to Alpine when using Kuby. Our plugin comes with a virtual package (anycable-build), which performs all the necessary steps to make Bundler work without any hacks:

Kuby.define("my-app") do

environment(:production) do

docker do

# ...

distro :alpine

package_phase.add("anycable-build")

# ..

end

# ...

end

end

Adding this package results in the following lines appearing in your Dockerfile:

RUN apk add --no-cache --update libc6-compat && \

ln -s /lib/libc.musl-x86_64.so.1 /lib/ld-linux-x86-64.so.2

# Pre-install grpc-related gems to build extensions without hacking Bundler

# or using BUNDLE_FORCE_RUBY_PLATFORM during `bundle install`.

# Uses gem versions from your Gemfile.lock.

RUN gem install --platform ruby google-protobuf -v '3.17.1' -N

RUN gem install --platform ruby grpc -v '1.38.0' -N --ignore-dependencies && \

rm -rf /usr/local/bundle/gems/grpc-1.38.0/src/ruby/ext

Deploying continuously with Kuby and GitHub Actions

So far, we’ve been deploying the app using our local machine. That’s great for experimentation and for setting things up, but for real production use, we prefer to use automation (or to build with git push origin production).

Moving Kuby operations to CI is a piece of cake, and it consists of just three steps: kuby build, kuby push, and kuby deploy.

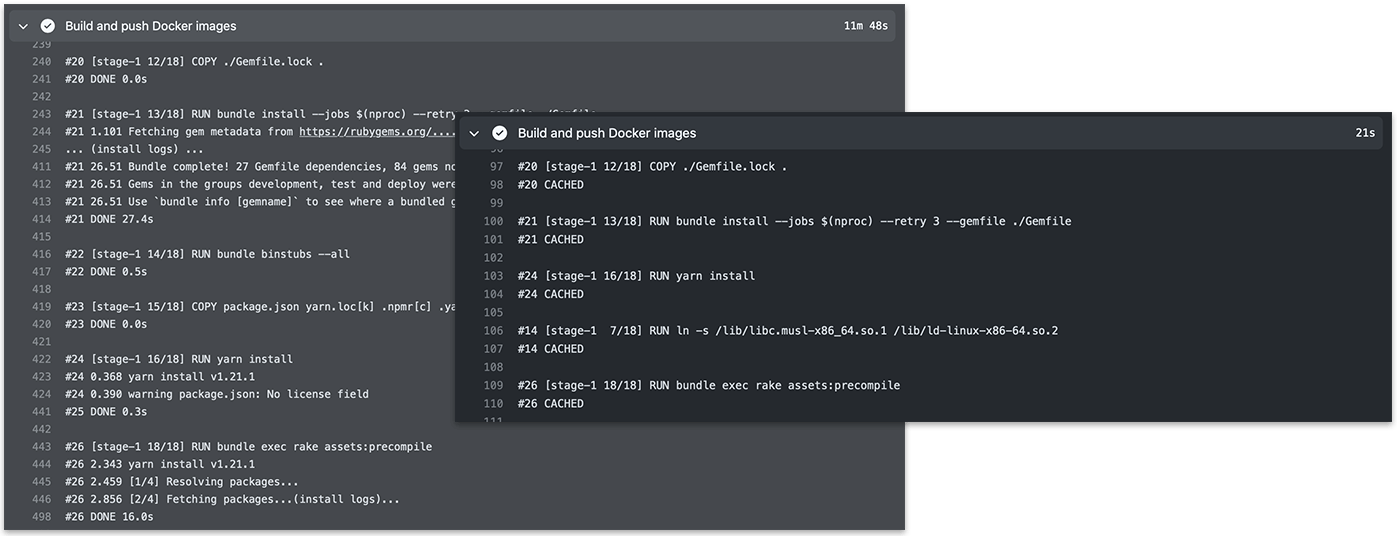

This straightforward approach has one significant drawback: building Docker images via CI is slow. 🐢 For the AnyCable demo app, building production images took ~15 minutes. I’ll show you how, by simply changing a few lines of code, we can reduce build time by up to ten times.

Building a Docker image on the CI before and after adding a cache

We recently published an article covering how to speed up Docker builds on GitHub Actions using BuildKit. We can’t apply the described techniques exactly here since we do not call Docker directly, but via Kuby. Further, since then, built-in GitHub Actions Cache support has been added to BuildKit.

Enough talk, here is the annotated Deploy action configuration (feel free to use it):

name: Deploy

on:
  workflow_dispatch:
  push:
    branches:
      - production

jobs:
  kuby:
    runs-on: ubuntu-latest
    env:
      BUNDLE_GEMFILE: gemfiles/deploy.gemfile
      BUNDLE_JOBS: 4
      BUNDLE_RETRY: 3
      BUNDLE_FROZEN: "true"
      KUBY_ENV: production
      CI: true
      RAILS_MASTER_KEY: ${{ secrets.master_key }}
    steps:
      - uses: actions/checkout@v2
      - uses: ruby/setup-ruby@v1
        with:
          ruby-version: 3.0.1
          bundler-cache: true
      # This action sets up the environment variables required
      # to use GitHub Actions Cache with BuildKit
      - uses: crazy-max/ghaction-github-runtime@v1
      # Installing Buildx plugin
      - uses: docker/setup-buildx-action@v1
        with:
          # This option is important:
          # it makes `docker build` use `buildx` by default
          install: true
      # We're going to push images directly via BuildKit,
      # not with Kuby; that's why we need to authenticate ourselves.
      # NOTE: Don't forget to add `DO_TOKEN` secret to GitHub Actions.
      - uses: docker/login-action@v1
        with:
          registry: registry.digitalocean.com
          username: ${{ secrets.do_token }}
          password: ${{ secrets.do_token }}
      # We add `--cache-from` and `--cache-to` to the build command to enable caching.
      # We also build images manually one by one (via the `--only` option): we have to use
      # different cache scopes to distinguish caches for different images.
      # Finally, the `--push` flag tells Docker to push images to the registry right away.
      - name: Build and push Docker images
        run: |
          bundle exec kuby build --only app -- \
            --cache-from=type=gha,scope=app --cache-to=type=gha,scope=app,mode=max \
            --push
          bundle exec kuby build --only assets -- \
            --cache-from=type=gha,scope=assets --cache-to=type=gha,scope=assets,mode=max \
            --push
      - name: Deploy Rails app
        # Consider adding a timeout to deploy action to avoid waiting
        # too long in case of deployment misconfiguration
        timeout-minutes: 5
        run: |
          bundle exec kuby deploy

What about Kuby without Rails?

To wrap things up, I’d like to talk a bit about Kuby in a Rails-less (or Rails-free? 🤔 Or …off-the-Rails? 🤯) context.

Kuby was designed to deploy Rails apps: the core gem (kuby-core) contains all the necessary Rails-gluing code (Rake tasks, middlewares), and the rails_app plugin is included by default (and there is no API to remove it 🤷🏻‍♂️). This is a relatively common situation and totally normal for a young project. Still, the community should step in and show interest in using Kuby to deploy, for example, Hanami apps. Or, maybe, we can make Kuby a universal infrastructure-as-code solution? I believe we can.

Can you imagine a production application without monitoring tools attached? Doubtful. At Evil Martians, we always add Prometheus/Grafana/Loki to Kubernetes installations. Installing the instrumentation stack itself is probably out of scope for Kuby (Digital Ocean, for instance, provides 1-click apps for that); conversely, connecting an application with Prometheus looks like a good fit to experiment with Rails-free Kuby.

For our demo installation, I added Ruby application metrics via Yabeda. AnyCable-Go ships with out-of-the-box Prometheus support. To see the actual data in Grafana, we need to instruct Prometheus to collect metrics from our deployments.

Kubernetes Monitoring Stack uses Prometheus Operator under the hood. Hence, to set up metrics collection, we need to create a ServiceMonitor Kubernetes resource. And unlike application resources, we must deploy it under the kube-prometheus-stack namespace.
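For reference, the kind of resource we are after can be sketched as a plain Ruby hash rendered to YAML. This is only an illustration of the ServiceMonitor shape, not output produced by Kuby: the label, namespace, and port values are the ones used for the demo app, while the resource name is a hypothetical choice.

```ruby
require "yaml"

# Sketch of a ServiceMonitor manifest for the Prometheus Operator.
# The "name" value is hypothetical; the other values mirror the demo app.
service_monitor = {
  "apiVersion" => "monitoring.coreos.com/v1",
  "kind" => "ServiceMonitor",
  "metadata" => {
    "name" => "anycable-rails-demo-monitor",
    "namespace" => "kube-prometheus-stack"
  },
  "spec" => {
    # Which Services to scrape, selected by label...
    "selector" => { "matchLabels" => { "app" => "anycable-rails-demo" } },
    # ...and in which namespaces to look for them.
    "namespaceSelector" => { "matchNames" => ["anycable-rails-demo-production"] },
    # The named Service port that exposes the metrics endpoint.
    "endpoints" => [{ "port" => "metrics" }]
  }
}

puts service_monitor.to_yaml
```

Note the two different namespaces: the monitor itself lives next to Prometheus, while `namespaceSelector` points back at the namespace where the application runs.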

To accomplish that, I had to extend and patch Kuby a little bit. That code is still experimental, and as such, it hasn’t been extracted into a gem yet. Nevertheless, you can find it in the demo PR. So, without further ado, let me show you the final Kuby configuration to manage the monitoring resources:

Kuby.define("anycable-rails-demo") do

environment(:production) do

app_creds = ActiveSupport::EncryptedConfiguration.new(

config_path: "./config/credentials/production.yml.enc",

key_path: "./config/credentials/production.key",

env_key: "RAILS_MASTER_KEY",

raise_if_missing_key: true

)

# This is a custom method to define an empty Kubernetes after_configuration

# (without anything related to Rails)

kubernetes_appless do

namespace do

metadata do

name "kube-prometheus-stack"

end

end

add_plugin :prometheus_service_monitor do

monitor do

spec do

selector do

match_labels do

add :app, "anycable-rails-demo"

end

end

namespace_selector do

match_names "anycable-rails-demo-production"

end

endpoint do

port "metrics"

end

end

end

end

provider :digitalocean do

access_token app_creds[:do_token]

cluster_id app_creds[:do_cluster_id]

end

end

end

end

We’ll put this code into a separate file (deploy/kuby_monitoring.rb) and run it as follows:

bin/kuby deploy -c ./deploy/kuby_monitoring.rb

Great! Now we can use Kuby to manage all the application-related infrastructure.

Isn’t this amazing? Kuby lowers the bar of adopting Kubernetes for Rails apps, leveraging the power of the convention-over-configuration principle. Just as Rails conquered the world with its “build a blog in 15 minutes” idea, so too could Kuby reign supreme in the context of deployment—“deploy Rails on Kubernetes in 15 minutes”.

Time will tell. The project is still growing, new plugins are emerging (like kuby-anycable), and I think it’s a good time to give it a try and contribute your own ideas.

By the way, at Evil Martians, we provide professional services on setting up and optimizing Kubernetes deployments. Feel free to give us a shout if that’s something you’re struggling with, or if you just need some expert advice.

P.S. I would like to thank Cameron Dutro for doing an amazing job bringing Ruby happiness to the Kubernetes world.