SPA hexagon: Robust app architecture for mobile and web

See how we stepped away from the Apollo-driven design for a GraphQL-backed multi-platform frontend application and went for a robust and scalable approach inspired by Alistair Cockburn’s Hexagonal Architecture that we applied to the modern React, React Native, and TypeScript stack. If you get inspired by diagrams and love solving high-level design puzzles—this post is for you!

This article summarizes our learnings from helping an American real estate startup simplify housing and create a better co-living experience. During this collaboration, we built two React Native applications and arrived at a generic way to build a scalable, robust, and, most importantly, cross-platform app, which we share in this article.

The goal of the applications was to allow members to access the coliving community from their devices, connect through chat, stay up to date on all the announcements, access exclusive perks and discounts, and attend and create events. The initial plan was to quickly ship a minimum viable product for the web to get the first users and then build mobile clients for iOS and Android that would have the same functionality.

At Evil Martians, we love to work iteratively, to respond faster to the changing requirements, as change is an inevitable part of the startup game. Here’s what we ended up doing:

- Quickly ship an MVP for the web in JavaScript with React, Apollo, apollo-link-state (part of apollo-link now), and without a state management library.

- Go back to the drawing board and take the learnings from the MVP to develop a cross-platform application where web functionality will be natively implemented for iOS and Android with React Native.

- See the project’s scope grow after the first production release and build a second application that is largely similar in features but requires a separate project started from scratch. We went to the drawing board yet again to finally arrive at a clear, scalable architecture that could serve a product development team for a long time and allow for shipping features on multiple platforms (web, iOS, Android) by avoiding code duplication as much as possible.

We will not talk much about the MVP and will focus on the second and third stages, where we got to try two different architectures and compare them. Both are described in this article.

Take one: Apollo-driven architecture

Apollo is currently the most obvious choice for every frontend developer looking to build a GraphQL-backed single-page application. It is a powerful toolset that covers both ends: you can build a GraphQL API with it and then consume that API from a GraphQL client. Apollo is perfect for hitting the ground running when scaffolding an MVP. It has a strong following and a rich ecosystem.

We had a good start with Apollo on the MVP stage of the product, so we decided to bring it into the “final” implementation. Soon we learned it had some shortcomings that only showed themselves as the product matured, but more on that later.

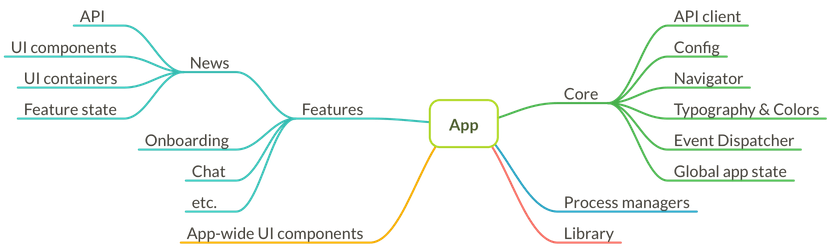

We also decided to go with TypeScript instead of plain JavaScript to catch as many bugs as possible before the code even hits the runtime. Another decision was to drop apollo-link-state as we learned it does not play well with the single responsibility and dependency inversion principles and encourages unwelcome code coupling (keeping state in the API client cache is not the best idea, in my opinion). We decided to go with Redux for state management as it allows us to keep things cleanly separated. Here is the mindmap of the initial architecture:

Apollo-driven architecture

The Core is responsible for configuration, connecting to the server, navigating between screens, dispatching events, and managing global state that can be used from any part of the app.

Features are isolated parts of the app, and each of them provides a single piece of functionality that knows nothing about other features and communicates with them through events. A feature can contain definitions for feature-specific GraphQL queries and mutations, a part of the Redux state related to the feature, dumb React components, and containers connected to the state and API.

In this design, the data that comes from the GraphQL backend bypasses the Redux state to arrive directly into the Apollo HOC (Higher Order Component) like so:

    interface IProps {/* skipped */}

    type TAllProps = ChildProps<IProps & IPostsQueryProps>;

    class PostsList extends Component<TAllProps, IState> {
      public render() {
        const { postsData } = this.props;

        if (postsData.isLoading) return <ScreenLoader />;

        return (
          <FlatList
            data={postsData.posts}
            renderItem={this.renderPost}
            refreshing={this.state.isRefreshing}
            onEndReached={postsData.fetchMore}
            {/* skipped */}
          />
        );
      }

      // skipped
    }

    export default compose(withPostsQuery())(PostsList);

The process managers are implemented with redux-saga and manage different flows like user authentication, gathering analytics, navigation between screens, push notifications, etc.

The library directory contains some helper functions and wrappers around React Native related libraries.

At first glance, the described approach seemed reasonable and working. We had loose coupling between different app features and high cohesion inside them. That allowed us to change something inside a feature and not to worry if we broke something.

However, during further development, some difficulties arose.

Apollo mission problems

First of all, there were a bunch of issues with Apollo and its internal cache. Every time we encountered an Apollo bug, we had to spend some time to find its cause and faced an expensive dilemma: dig into the library, make a pull request and cross fingers, or find a workaround.

For example, I wasted almost a week fixing a bug with infinite scrolling where a new portion of data appeared and disappeared repeatedly. You can take a look at how it looked in the iOS simulator:

Apollo issue

I thought the issue was caused by React Native’s FlatList or something like this. It took other Martians and me some time to realize that the problem was in the order of requests and responses in the Apollo code. Probably, it is fixed by now, but it did cost us valuable time.

Because we chose the component-based approach to communicate with the server, we couldn’t just quickly swap Apollo for another library.

Isolating sub-states for each feature made it difficult for us to share state between features when necessary. There was no place where we could place such states to DRY them out, so we needed to sync through events and process managers. That caused some bugs, too.

Although the mobile app was a clone of the web application, it was still a separate codebase, so we still had to copy and paste code to reuse it, making adjustments for React Native in the process.

In other words, we had loose coupling between the app features but had strong coupling between the API and UI layers.

We had almost identical API queries strongly coupled with React and React Native components, along with different approaches to state management at different platforms. When we needed to port something from the web app into the mobile app, we had to write all the UI components again and rewrite the state management code to make it work—not the most productive approach.

Besides these issues, we had performance problems caused by nested Apollo higher-order components, unpredictable re-rendering, and styled-components, which sometimes made a React component render two to five times slower. So we spent some time fixing these issues, too.

And, of course, as we finally fixed all the bugs and released the first version of the production application, the product requirements evolved too: now we had to ship a much more advanced cross-platform app for iOS and Android in just six weeks!

Take two: Building a Hexagon

After mixed success with Apollo and given the tight schedule and the increased scope, we made a bold decision to start from scratch yet again, this time by setting clear goals from the get-go:

- To make it easy to refactor the app and replace any part of it.

- To increase maintainability and reduce the probability of introducing bugs and accruing technical debt.

- To make code as reusable between different platforms as possible.

- To make the app fast and smooth.

- To make it easy to implement the offline mode.

If we see the application as a toolbox, then the architecture is the layout of all the drawers, boxes, and holding pens where the good stuff will go. To arrive at a good architecture, we need to focus on organizing things and setting boundaries between different tools, so they don’t get mixed up. So it is time to forget about libraries themselves: React, Redux, Apollo, MobX, you name it, are just the building blocks.

The goal was to build an application where these blocks can be constructed, replaced, refactored, and debugged separately. Such an approach reduces development and testing time, gives us high maintainability, and reduces the technical debt. Adding new code doesn’t require massive changes, debugging requires as few workarounds as possible, and testing is less cumbersome.

As I had carte blanche to work on a high level and the power of the Martian team behind me to build an actual implementation, I decided to try something I had been considering for a long time: implementing the Hexagonal Architecture by Alistair Cockburn, one of the great minds behind The Manifesto for Agile Software Development.

Judging from all my experience, it seemed a reasonable choice for SPAs and React Native apps, and it was exactly what I wanted from a robust architecture. All I had to do was adapt it a bit.

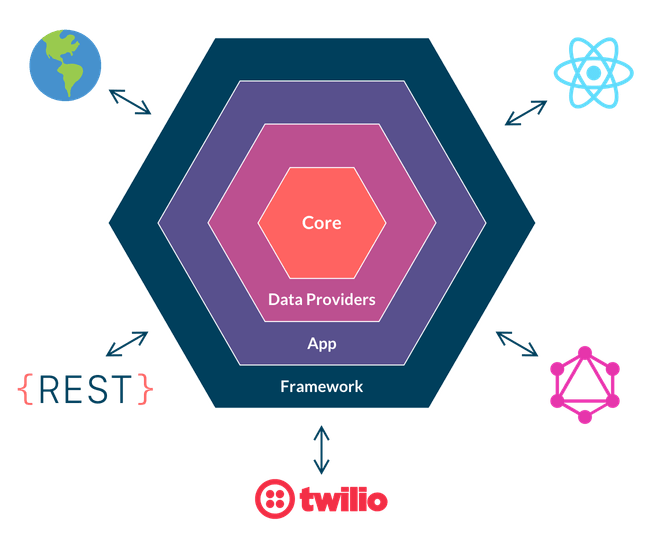

According to Cockburn, hexagonal architecture consists of multiple layers. Each layer has a boundary only with the layer directly outside it. It is decoupled from the other layers and knows nothing about them; it only defines the contracts they have to fulfill.

I decided to break the app into the core, data providers, app, and framework layers.

In our case, contracts are TypeScript interfaces that define possible ways to interact with the layer in question. We place such interfaces into interfaces.ts files inside each layer. The rule of thumb is to use interfaces whenever we need multiple implementations of the same concept. Also, it is a good idea to define an interface when we want to abstract from a low-level implementation and avoid mixups between layers.

An example of a contract for a news data provider might look like this:

    interface INewsDataProvider {
      fetchPost: (id: string) => Promise<FetchPostResult>;
      fetchPosts: (limit?: number) => Promise<FetchPostsResult>;
    }

To transfer data and keep the layers and other parts of the code uncoupled, we define and use DTOs (Data Transfer Objects). We define them as TypeScript interfaces as well.

The movement inside the hexagon goes from the outside layer in: we inject dependencies into each layer from an encircling one. The dependencies must implement the corresponding interfaces defined in the inner layers.

Using interfaces for the parts of our application that may change is a way to isolate the changes. With interfaces, we can create new implementations or add more functionality around an existing implementation as needed without fear of breaking the app.
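To make the direction of dependencies concrete, here is a minimal sketch. All the names in it (`ISettingsStorage`, `ThemeService`, and both storage classes) are hypothetical and do not come from the project; the point is only that the inner code owns the contract while the outer code supplies an implementation.

```typescript
// Inner layer: defines the contract it needs and depends on nothing else.
// `ISettingsStorage` and `ThemeService` are hypothetical names for illustration.
interface ISettingsStorage {
  read(key: string): string | undefined;
  write(key: string, value: string): void;
}

class ThemeService {
  // The dependency is injected from an outer layer through the constructor.
  public constructor(private readonly storage: ISettingsStorage) {}

  public getTheme(): string {
    const stored = this.storage.read("theme");
    return stored === undefined ? "light" : stored;
  }

  public setTheme(value: string): void {
    this.storage.write("theme", value);
  }
}

// Outer layer: one possible implementation of the contract.
class InMemorySettingsStorage implements ISettingsStorage {
  private readonly items: Record<string, string> = {};

  public read(key: string): string | undefined {
    return this.items[key];
  }

  public write(key: string, value: string): void {
    this.items[key] = value;
  }
}

// Another implementation; the inner layer does not change when we swap it in.
class ReadOnlySettingsStorage implements ISettingsStorage {
  public constructor(private readonly defaults: Record<string, string>) {}

  public read(key: string): string | undefined {
    return this.defaults[key];
  }

  public write(): void {
    // Writes are ignored on purpose in this variant.
  }
}
```

Swapping `InMemorySettingsStorage` for `ReadOnlySettingsStorage` changes the behavior without touching `ThemeService`, which is the property the interfaces are meant to give us.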

Next, I’ll go through all the layers, describe their purpose, and then show you how we implemented each of them.

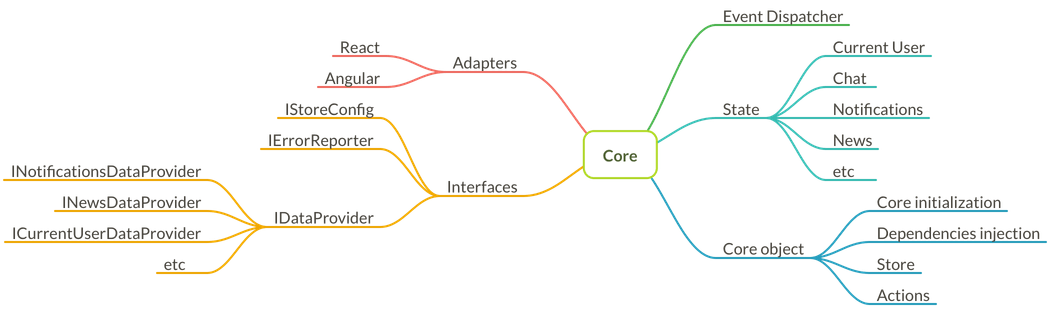

Core layer

This layer is the essence of the app—it contains the business logic, keeps all the data we need, and defines how the outside layers can interact with it. The core must know nothing about UI or API implementations. It doesn’t care if its dependency is implemented in the nearest outer layer or any further layers.

The only thing the core cares about is if the dependency implements an interface defined in the core. Such an approach allows us to write and test code without distractions.

We can even extract the core as a separate library to reuse it for another platform at any moment.

The core layer

Isolation of the core layer also makes fantastic things possible, like the one described in “The curious case of reusable javascript state management”:

“There were cases where we had a very large initial state and could hydrate our state in a nodejs Lambda and send the reconciled state to the UI, so we did very little computation on the browser. This was only possible because our state was a separate package that could be imported into any javascript environment”.

To make computations and change the state, we define commands in the core and export them for use in the outer layers. Each command is an “action” in terms of business logic. Examples of such commands are “Update the user’s name”, “Get the latest news”, “Select a profile to show”.
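As a rough illustration of what such commands could look like when stripped of any library, here is a sketch with plain functions over a plain state object. The state shape and the command names are assumptions made up for this example; in the actual app, the commands are built on top of Redux, as described later.

```typescript
// Hypothetical core state and commands; none of these names come from the project.
interface IProfile {
  id: string;
  name: string;
}

interface ICoreState {
  profiles: IProfile[];
  selectedProfileId?: string;
}

// Each command is a plain function describing one business action.
// It returns a new state instead of mutating the old one.
const commands = {
  // "Update the user's name"
  updateUserName(state: ICoreState, id: string, name: string): ICoreState {
    return {
      ...state,
      profiles: state.profiles.map((profile) =>
        profile.id === id ? { ...profile, name } : profile
      ),
    };
  },

  // "Select a profile to show"; unknown ids leave the state untouched.
  selectProfile(state: ICoreState, id: string): ICoreState {
    const exists = state.profiles.some((profile) => profile.id === id);
    return exists ? { ...state, selectedProfileId: id } : state;
  },
};
```

Because the commands know nothing about the UI, they can be called from a React component, a test, or a script in the same way.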

When it’s unclear whether some data and code should go into the Core layer or the app’s UI, it is useful to understand the difference between the business logic and the graphical interface that lets users interact with the logic.

It might be helpful to pretend that we have not one but two apps: a mobile app and a web app with the same core logic but a possibly different UI implementation.

Michel Weststrate, the author of MobX, explains how to decide what should go into the core logic in his excellent article “UI as an afterthought”:

“Initially, design your state, stores, processes as if you were building a CLI, not a web app… Nothing beats the simplicity of invoking your business processes directly as a set of functions”.

Data Providers layer

The essence of this layer is to be a mediator between the core layer and the outer layers.

We don’t import any code from the app and framework layers into this one and don’t place any low-level implementations here. We can import code from the core layer, however.

The Data Providers layer

The data providers layer contains implementations for the interfaces defined in the core. We use the implementations to make requests to the API client and persistent storage and provide data to the core layer.

Along with the implementations of the core interfaces, the layer can contain definitions of the IApiClient and IPersistedStorage interfaces that we should implement at the app layer or framework layer. We need them to make requests to the outer world without knowing about the low-level implementations of the interfaces.

Additionally, we can place schema validations and data converters in this layer to validate the data from the server and avoid runtime errors.
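A hand-written validator and converter for a single entity might look like the sketch below. The `IPostDTO` shape and the `published_at` field name are assumptions for the sake of the example; a schema validation library could do the same job.

```typescript
// Hypothetical DTO expected by the core.
interface IPostDTO {
  id: string;
  title: string;
  publishedAt: Date;
}

// Narrow an unknown value to a plain object with string keys.
function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null;
}

// Validate the raw server payload and convert it into the DTO.
// Returns `undefined` instead of throwing, so the caller decides how to report it.
function toPostDTO(raw: unknown): IPostDTO | undefined {
  if (!isRecord(raw)) return undefined;

  const id = raw["id"];
  const title = raw["title"];
  const publishedAtRaw = raw["published_at"];

  if (typeof id !== "string" || typeof title !== "string") return undefined;
  if (typeof publishedAtRaw !== "string") return undefined;

  // Convert the wire format (an ISO string) into a `Date` and reject invalid dates.
  const publishedAt = new Date(publishedAtRaw);
  if (isNaN(publishedAt.getTime())) return undefined;

  return { id, title, publishedAt };
}
```

With a converter like this, a malformed response is rejected at the boundary of the data providers layer instead of surfacing as a runtime error deep inside the UI.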

Like the core layer, the data providers layer can be reused to implement a web app without changes. In such a case, we might want to extract it into a separate package.

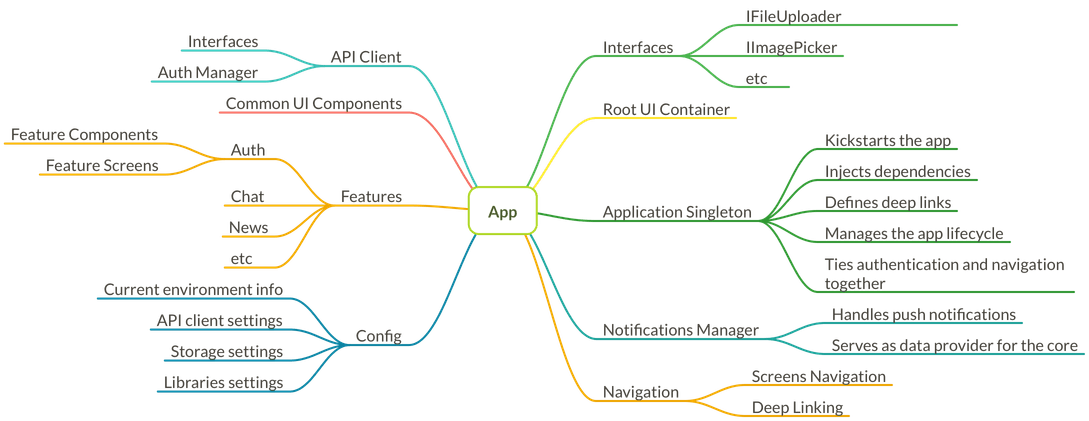

Application layer

The application layer contains the implementation of the app itself. Here, we place the navigation, the UI, push notifications manager, the Application singleton to manage the app’s lifecycle, and the code that glues all the parts together.

We can import code from the core, data providers, and framework layers into this layer.

The Application layer

In the Application singleton, we instantiate data providers, storage, the API client, and other stuff and inject them into the inner app layers: the core layer and the data providers layer.

The UI is split into features, and features themselves consist of screens. Usually, a feature contains two directories: screens and components. The feature’s components folder contains only the feature-related components.

We keep the UI as thin as possible and move all the business logic and data into the core. However, a UI component can keep data in its internal state if that data makes no sense outside of the component.

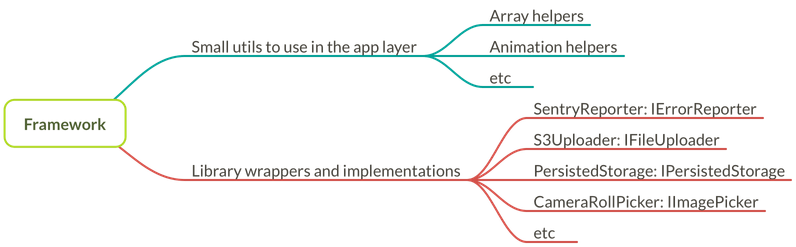

Framework layer

This layer is intended to keep adapters to various libraries used by our application. Examples of such adapters are error reporters, file uploaders, persisted storage implementations. You shouldn’t use a library directly in the app. Write adapters instead to make it easy to ditch a library if you have to.

The Framework layer

When implementations are encapsulated and follow defined interfaces, it becomes much easier to add, modify, or replace libraries and extend the framework.

We can place some utilities into this layer (e.g., array or animation helpers) without implementing an interface. We have to ensure we import them into the app layer only and don’t use them in the inner layers. If we need to import something into an inner layer, we have to define the corresponding interface and implement it at the framework layer.
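Here is a small sketch of the adapter idea. The article mentions an `IErrorReporter` interface, but the method shape used below is an assumption, and `FakeCrashSdk` is a made-up stand-in for a third-party SDK, not a real library.

```typescript
// Contract defined in an inner layer. The `report` signature is an assumption.
interface IErrorReporter {
  report(error: Error, context?: Record<string, string>): void;
}

// Stand-in for a third-party SDK with its own API shape (hypothetical).
class FakeCrashSdk {
  public readonly sent: string[] = [];

  public captureException(message: string, tags: string[]): void {
    this.sent.push(`${message} [${tags.join(",")}]`);
  }
}

// Framework-layer adapter: the only place that knows about the SDK.
class CrashSdkErrorReporter implements IErrorReporter {
  public constructor(private readonly sdk: FakeCrashSdk) {}

  public report(error: Error, context: Record<string, string> = {}): void {
    // Translate our own context format into whatever the SDK expects.
    const tags = Object.keys(context).map((key) => `${key}=${context[key]}`);
    this.sdk.captureException(error.message, tags);
  }
}
```

If the SDK has to be replaced, only the adapter changes; every caller keeps depending on `IErrorReporter`.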

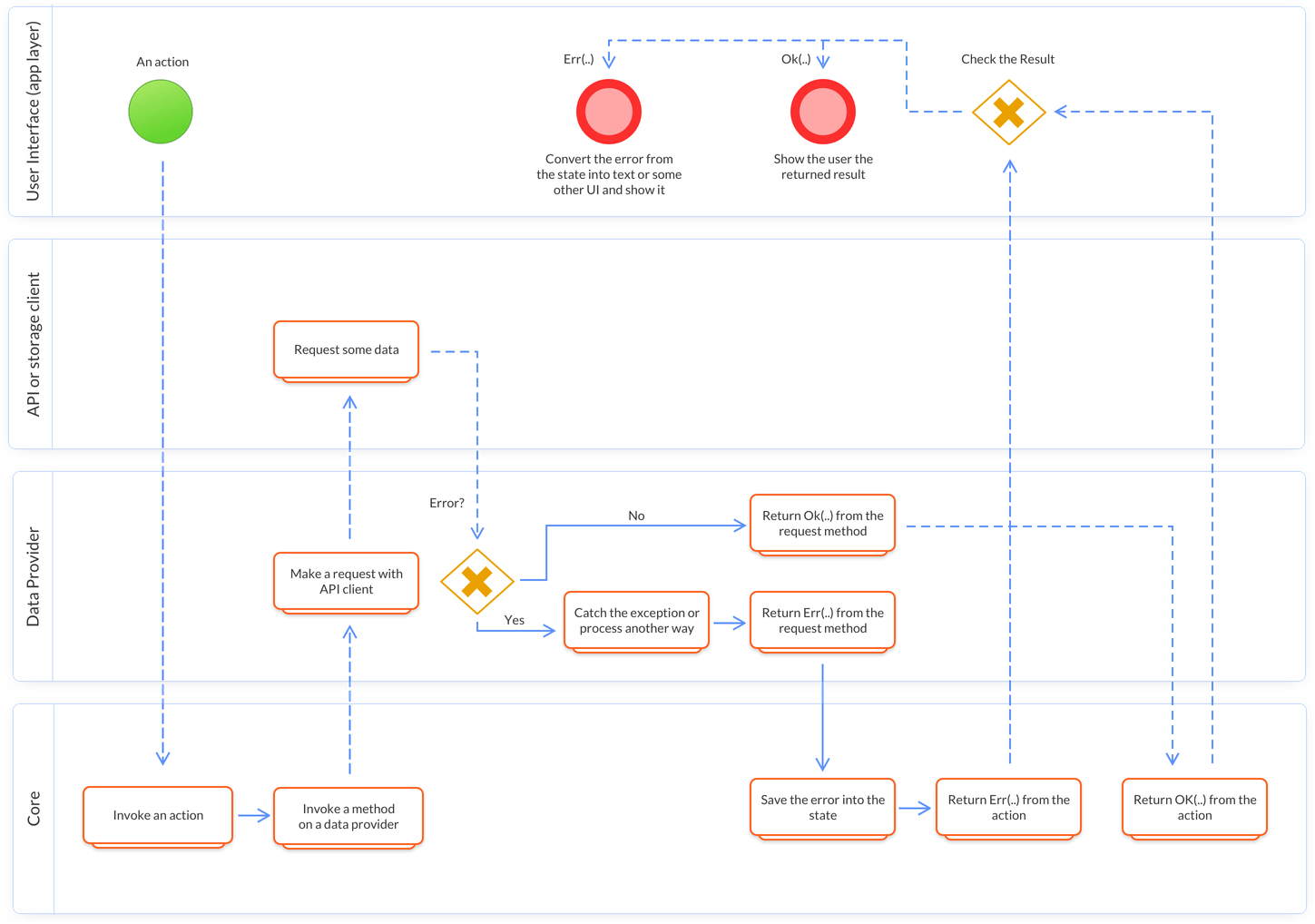

Error handling

No architecture can be robust without a well-defined error handling flow. We thought it through several times and ran some experiments before we arrived at the flow we wanted.

We allow exceptions in low-level implementations only: failed network requests, unexpected errors in the native code or third-party libraries, and so on. Besides, there can be implicit exceptions when the server returns 200 OK, but the response body contains an unexpected error.

Every time an exception occurs, we must handle it and convert it into the corresponding error type defined in the core layer.

To define whether an operation was successful or not, it’s convenient to use the Result type. We define such types in the core layer along with the error types and use them in data providers and the app layer.
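A minimal sketch of the idea, assuming a hand-rolled `Result` built as a discriminated union. This is not the Result implementation used in the project (the code later in this article calls `is_ok()`, `ok()`, and `err()` on its results), and the error kinds are invented for the example.

```typescript
// Error type that would live in the core (hypothetical kinds).
interface INewsError {
  kind: "invalidResponse" | "notFound";
}

// A hand-rolled Result: either a value or a typed error, never an exception.
type Result<T, E> = { tag: "ok"; value: T } | { tag: "err"; error: E };

function Ok<T>(value: T): Result<T, never> {
  return { tag: "ok", value };
}

function Err<E>(error: E): Result<never, E> {
  return { tag: "err", error };
}

// Low-level code may throw (here, `JSON.parse`); the boundary catches the
// exception and converts it into an error type defined in the core.
function parsePostTitle(json: string): Result<string, INewsError> {
  try {
    const parsed: unknown = JSON.parse(json);
    if (typeof parsed === "object" && parsed !== null && "title" in parsed) {
      const title = (parsed as { title: unknown }).title;
      if (typeof title === "string") return Ok(title);
    }
    return Err<INewsError>({ kind: "notFound" });
  } catch {
    return Err<INewsError>({ kind: "invalidResponse" });
  }
}
```

The caller has to check `tag` before it can touch `value`, so the compiler reminds us to handle the failure case.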

Here is what the flow looks like:

The error handling flow

If an error occurs in the app layer, we don’t save it into the core; to keep things simple, we process it in the UI. This usually happens when a library from the framework layer throws an exception or calls an error callback.

The implementation

Once we defined the architecture, we had to choose the tools to implement it and build the app.

The first and primary tool for every application is the language to write the app in. We picked TypeScript because it is statically typed, has interfaces, and lets us catch as many bugs as possible at development time.

To implement the core, we chose Redux. The UI is implemented with React, as the app is written with React Native. However, our architecture allows us to swap the libraries without touching any other code, if necessary.

Structure of the core

We have some important parts here: the state, an event dispatcher, public interfaces definitions for the layer, the adapter to connect the core to React (we can add other adapters if we have to), and the Core object to initialize the core and interact with the state directly.
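The event dispatcher is not shown in this article, so the following is only a sketch of how a typed dispatcher could be built. The `on` and `dispatch` method names and the `ICoreEvents` map are assumptions; only the `EventDispatcher<...>` name appears in the code further below.

```typescript
// Map of event names to payload types (hypothetical events).
interface ICoreEvents {
  loggedIn: { userId: string };
  loggedOut: undefined;
}

type Listener<P> = (payload: P) => void;

class EventDispatcher<E> {
  private entries: Array<{ event: keyof E; listener: Listener<unknown> }> = [];

  // Subscribe to an event and get back a function that unsubscribes.
  public on<K extends keyof E>(event: K, listener: Listener<E[K]>): () => void {
    // The payload type is erased for storage; `on` and `dispatch` keep it
    // consistent for callers through the shared `K` type parameter.
    const entry = { event, listener: listener as Listener<unknown> };
    this.entries.push(entry);
    return () => {
      this.entries = this.entries.filter((item) => item !== entry);
    };
  }

  // Call every listener registered for the event with a typed payload.
  public dispatch<K extends keyof E>(event: K, payload: E[K]): void {
    this.entries
      .filter((item) => item.event === event)
      .forEach((item) => item.listener(payload));
  }
}
```

With `new EventDispatcher<ICoreEvents>()`, a call such as `dispatch("loggedIn", { userId: "u1" })` type-checks, while a misspelled event name or a wrong payload shape is rejected at compile time.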

To make Redux actions and reducers type-safe and avoid stupid bugs, we wrote some helpers to infer types. The type definitions added some boilerplate, but we resolved this problem with code generators using Hygen.

The state is divided into sub-states. Sub-states must know nothing about each other.

Here’s what the state directory looks like:

    ├── state
    │   ├── actions.ts            # actions object to use in the `Core` object
    │   ├── common                # models and interfaces to use in multiple sub-states
    │   │   ├── index.ts
    │   │   ├── interfaces
    │   │   ├── models
    │   │   └── samples.ts
    │   ├── aTypicalSubstate      # sub-state
    │   │   ├── __tests__         # unit tests
    │   │   │   ├── actions.ts
    │   │   │   └── state.ts
    │   │   ├── actions.ts        # Redux actions and types
    │   │   ├── errors.ts         # error definitions
    │   │   ├── helpers.ts        # optional helpers module
    │   │   ├── index.ts          # umbrella module to export public definitions
    │   │   ├── interfaces.ts     # public interfaces
    │   │   ├── state.ts          # the sub-state itself
    │   │   └── validators.ts     # optional module with validators
    │   ├── global.ts             # the `IGlobalState` interface definition to use in sub-states
    │   ├── rootReducer.ts        # all the sub-states collected in a single object
    │   └── selectors.ts          # global selectors which use data from several sub-states

Actions

We define our actions as classes for type safety’s sake. To make it work properly with Redux, we convert them to plain objects in middleware.

    export enum Types {
      RESET = "CURRENT_USER/RESET",
      SET = "CURRENT_USER/SET",
    }

    class ResetAction implements IAction {
      public readonly type = Types.RESET;
    }

    class SetCurrentUserAction implements IAction {
      public readonly type = Types.SET;

      public constructor(public data?: ICurrentUserData) {}
    }

Once we defined the actions, we can use them in the Actions map to infer types and bind the action creators with the dispatch function—just the usual Redux stuff with the corresponding types.
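Redux expects actions to be plain objects, which is why class instances have to be converted before they reach the reducers. The middleware itself is not shown in this article, so here is a dependency-free sketch of the conversion. The `Dispatch` type is a simplified local stand-in for the Redux one, and `SetUserNameAction` is a made-up action.

```typescript
// Minimal local stand-ins for the Redux types, so the sketch has no dependencies.
interface IAction {
  type: string;
}

type Dispatch = (action: IAction) => IAction;

// A hypothetical class-based action.
class SetUserNameAction implements IAction {
  public readonly type = "USER/SET_NAME";

  public constructor(public readonly name: string) {}
}

// Middleware in the usual `store => next => action` shape (the store argument
// is not needed here). Object spread copies the instance's own enumerable
// properties into a fresh object literal, which drops the class prototype.
const classActionsToPlainObjects =
  () =>
  (next: Dispatch): Dispatch =>
  (action) =>
    next({ ...action });
```

The reducers still receive the `type` and the payload fields, but the object no longer carries the class prototype, so a plain-object check passes.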

Every async action returns a promise containing the result. At this point, all the possible exceptions are already handled by the corresponding data providers. So we can take the result, unwrap it, dispatch the actions, and return the result to use in UI later.

Here’s what the Actions object can look like:

    export const Actions = {
      reset: () => new ResetAction(), // Action creator for ResetAction

      // An async action
      fetchCurrentUserData:
        () =>
        async (dispatch, _, { currentUser }) => {
          // Here we use the injected data provider implementation
          // for the `ICurrentUserDataProvider` interface
          const result = await currentUser.fetchCurrentUser();

          // The data provider returns the `Result` type
          // which we have to unwrap first to get the data
          if (result.is_ok()) {
            const data = result.ok();
            dispatch(new SetCurrentUserAction(data));
          }

          // We return the result to use it in UI components to see if there is an error
          return result;
        },
    };

    // `ActionTypes` is a union type with all the actions.
    // We use it in a reducer to infer actions types
    export type ActionTypes = ActionsUnion<ActionCreatorsMap>;

    // `ICurrentUserActions` is the final action creator's map type
    // without the unnecessary information about internals
    // which we export from the core and use in the outer layers.
    export type ICurrentUserActions = MakeBoundActionCreatorsMap<ActionCreatorsMap>;

    // The namespaced actions type to use in exports
    export interface INamespacedCurrentUserActions {
      currentUser: ICurrentUserActions;
    }

    // Create actions and export them to use outside
    export const mapDispatch = (
      dispatch: Dispatch
    ): INamespacedCurrentUserActions => ({
      currentUser: bindActionCreators<typeof Actions, ICurrentUserActions>(
        Actions,
        dispatch
      ),
    });

State

When the actions are defined, we can implement the state and the update function (a reducer, in terms of Redux). We use Immer to easily and immutably update the state and Reselect to create memoized selectors for the stored and computed data.

import { ActionTypes, Types } from "./actions";

export interface IState {

// The current user state definition

}

// The namespaced state definition to create the namespaced state object

// to merge with the global app state

export interface ICurrentUserState {

currentUser: IState;

}

export const initialState: IState = {

id: undefined,

//...

};

export const update = immer((draft: IState, action: ActionTypes) => {

switch (action.type) {

case Types.SET: {

// We pass the data using a DTO

const { data } = action;

// Thanks to Immer, we can just assign the data

if (data && data.user) {

draft.id = data.user.id;

draft.someData = data.user.someData;

}

return;

}

case Types.RESET: {

return initialState;

}

}

}, initialState);

const currentUserSelector = (state: { currentUser: IState }): IState =>

state.currentUser;

// A selector to select the stored and computed data

// To compute data, we user memoized `createSelector` from `reselect`

export const getStateWithDerivedData = createSelector(

currentUserSelector,

(currentUser): ICurrentUserState => ({

currentUser: {

...currentUser,

// Here we can place some computed data

},

})

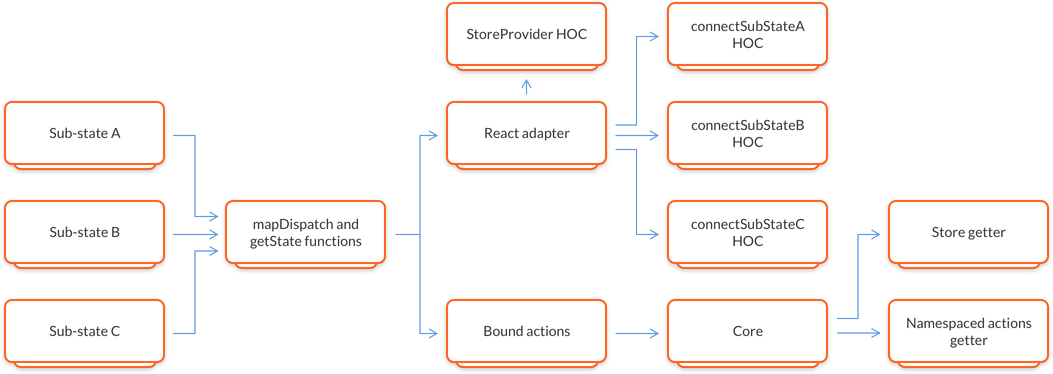

);React adapter

To use all the sub-states in React components, we implemented an adapter for React. It imports all the sub-states definitions and their actions, connects them to the Redux store, and exports a connected higher-order component for every sub-state.

To use the state and actions outside the UI, we defined the actions getter on the Core object, which returns an object with namespaced actions for each sub-state, and the store getter, which returns the Redux store object.

Exporting state and actions through the React adapter and the Core object

Here’s how we implemented the React adapter. We define types and build the corresponding HOCs for each of our sub-states and merge them into the global Redux state. Also, we make the store provider component here to provide UI components with our data.

    import * as currentUser from "./state/currentUser";
    //... and other imports

    // Namespaced state type definition.
    // The state and its actions will be placed under the `currentUser` key in the global state
    export type ICurrentUserProps = currentUser.ICurrentUserState &
      currentUser.INamespacedCurrentUserActions;

    // Connected Redux higher-order component
    export const connectCurrentUserState = connect(
      (state: IGlobalState) => ({
        currentUser: {
          ...currentUser.getStateWithDerivedData(state).currentUser,
          profile: currentUserProfileSelector(state),
        },
      }),
      currentUser.mapDispatch,
      // Merging our sub-state into the global state
      (state, actions, props: object): ICurrentUserProps => ({
        ...props,
        currentUser: { ...state.currentUser, ...actions.currentUser },
      })
    );

    // ... Exports for the rest of the connected components

    // Store provider to wrap the root UI component
    export const StoreProvider = ({ children }) => (
      <Provider store={getStore()}>{children}</Provider>
    );

The Core object definition is pretty simple. It just re-exports the store and actions and calls some internal stuff. Also, we define all the dependencies and options using the ICoreInitOptions interface.

We call the Core.init method from the Application singleton and inject the dependencies while bootstrapping the app.

    interface ICoreInitOptions {
      config: IStoreConfig;
      dataProvider: IDataProvider;
      dispatcher: EventDispatcher<CoreEvents>;
      errorReporter: IErrorReporter;
      afterInitCallback: () => unknown;
    }

    export const Core = {
      init(options: ICoreInitOptions): void {
        // We inject all the passed dependencies and configure the store here
      },

      get actions() {
        // Return the namespaced actions of every sub-state
        // to use outside React
      },

      get store() {
        // Return the Redux store object to use outside React
      },

      resetState() {
        // Reset all the sub-states
      },
    };

Data providers in detail

We have a GraphQL backend, so the data providers layer has definitions of the queries and mutations. We decided not to use Apollo or any other third-party GraphQL client anymore. Instead, we made a small wrapper around the Fetch API, put the implementation in the API client in the app layer, and use it every time we want to make a query from a data provider.
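The wrapper itself is not shown in this article. A minimal sketch of such a client could look like the code below. The class shape, the `FetchLike` type, and the error messages are assumptions; the only conventions taken for granted are the usual GraphQL-over-HTTP ones, a POST request with a JSON body containing `query` and `variables`, and a response with `data` and `errors` fields.

```typescript
// Minimal structural types for the parts of the Fetch API the sketch relies on,
// so it can be exercised without a network connection.
interface IFetchResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}

type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<IFetchResponse>;

interface IGraphQLResponse<T> {
  data?: T;
  errors?: Array<{ message: string }>;
}

class ApiClient {
  public constructor(
    private readonly endpoint: string,
    private readonly fetchImpl: FetchLike
  ) {}

  // Throws on HTTP errors and on GraphQL errors that arrive with 200 OK.
  // Data providers are expected to catch these and wrap them into a Result.
  public async query<T>(
    query: string,
    variables: Record<string, unknown> = {}
  ): Promise<T> {
    const response = await this.fetchImpl(this.endpoint, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ query, variables }),
    });

    if (!response.ok) throw new Error(`HTTP ${response.status}`);

    const payload = (await response.json()) as IGraphQLResponse<T>;

    if (payload.errors && payload.errors.length > 0) {
      throw new Error(payload.errors.map((error) => error.message).join("; "));
    }
    if (payload.data === undefined) throw new Error("Empty GraphQL response");

    return payload.data;
  }
}
```

Injecting the fetch function keeps the client testable; in the app, the platform's own `fetch` could be passed in, since it is structurally compatible with `FetchLike`.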

This layer is the only place outside of the framework layer where exceptions are allowed. Here we catch failed network requests, unexpected errors in the native code, and all the possible exceptions and server errors and wrap them into the Result type.

The data provider concept makes it easy to implement offline mode with predictable behavior in the app. Here is how we can implement a data provider that fetches the news and falls back to the local cache:

    // The `INewsDataProvider` is defined in the core
    export class NewsDataProvider implements INewsDataProvider {
      private static readonly storageKey = "news/posts";

      // We inject our API and local storage clients here
      public constructor(
        private client: IApiClient,
        private storage: IPersistedStorage
      ) {}

      private readPostsFromCache(): Promise<IPost[] | undefined> {
        return this.storage.getItem<IPost[]>(NewsDataProvider.storageKey);
      }

      private async writePostsToCache(posts: IPost[]): Promise<void> {
        return this.storage.setItem(NewsDataProvider.storageKey, posts);
      }

      private async fetchPostsFromCache(limit: number): Promise<FetchPostsResult> {
        const cache = await this.readPostsFromCache();

        return cache
          ? Ok(cache.slice(0, limit))
          : Err(new NewsError("transportError"));
      }

      private async fetchPostsFromAPI(limit: number): Promise<FetchPostsResult> {
        try {
          const response: IFetchPostsQueryResult = await this.client.query(
            fetchPostsQuery,
            { limit }
          );

          return Ok(response.posts);
        } catch (error) {
          return Err(new NewsError("transportError"));
        }
      }

      // First, we try to fetch posts from the server and save them to the cache
      // If something goes wrong, we try to fetch the posts from the storage
      public async fetchPosts(limit = 20): Promise<FetchPostsResult> {
        const result = await this.fetchPostsFromAPI(limit);

        if (result.is_ok()) {
          this.writePostsToCache(result.ok());

          return result;
        } else if (result.err().kind === "notFound") {
          return result;
        }

        return this.fetchPostsFromCache(limit);
      }
    }

The Application singleton

We implemented the app layer using React, React Navigation, some libraries from React Native and wrappers from the framework layer.

The heart of the layer is the Application singleton. It launches the app, injects dependencies, and glues all the parts together. Simplified, it is defined this way:

    export class Application {
      private client?: ApiClient;
      private notificationsManager!: NotificationsManager;

      public readonly config = new Config();
      public readonly dispatcher = new EventDispatcher<AppEvents>();
      public readonly storage!: IPersistedStorage;
      public readonly errorReporter!: IErrorReporter;
      public readonly dataProvider!: IDataProvider;

      // Inject dependencies for the inner layers
      public constructor() {}

      // Inject dependencies for the inner layers, subscribe to core events
      public init(): void {}

      // Fetch the user data and navigate to the proper screen
      public handleLogin = async () => {};

      public get apiClient(): ApiClient {
        return this.client || (this.client = this.buildApiClient());
      }

      private buildApiClient(): ApiClient {
        const actions: IApiClientActions = {
          onLogin: () => this.onLogin(), // E.g., store the access token
          onLogout: () => this.onLogout(), // E.g., clean the user data and token
          getUploader: (...args): IUploader => new AWSUploader(...args),
        };

        return new ApiClient(this.config, actions, this.storage);
      }
    }

More about the framework layer

This layer contains implementations for the interfaces defined in the core, data providers, and app layers. When implementations are encapsulated and follow defined interfaces, it becomes much easier to add, modify, or replace libraries and extend the framework.

Also, we put here some simple utils to use in the app layer.

Once we bootstrapped the architecture for the app, it was quite easy to build the app itself with a team of five in just a month.

With the hexagonal architecture and TypeScript, we’ve got an extensible, fast, and responsive app with a smooth user experience and almost no runtime errors. It is now easy to make changes in the app, add offline support, write tests, and reuse the business and data fetching logic if we need to implement another app.

We hope you will make good use of this article once you have to solve architecture-related challenges in your upcoming React projects, even though this approach does not limit itself to React, or, in fact, any library, as it allows you to transcend the limitation of the frameworks and make your project forever more scalable.

Feel free to give us a shout if you need to enlist Martian frontend engineers to help you design and build your next web or mobile application that will be easy to maintain after the collaboration is over, or if your team could use some help with establishing the best practices for the future.