Hey, AnyCable speaking! Needing help with a Twilio-OpenAI connection?

The last 20 years have seen cascading tech revolutions, especially in how we communicate with one another. The mobile revolution! The smartphone revolution! The AI revolution(?) Things have really changed, but traditional phone calls are also still here, despite the evolving tech. Sure, we sometimes have to escalate through human representatives to reach the person who can help us, “press 2 for billing”, or something else like this. But that means there’s room for us to dial up our services another notch! So, let’s talk about enhancing the over-the-phone UX with modern AI and realtime technologies, like OpenAI, Twilio, and AnyCable!

Despite the popularity of text messaging tools, phone calls still remain the main customer service communication method. Sometimes you’re talking to a human, other times you’re interacting with an algorithm and some accompanying state machine.

Of course, these days, it’s also increasingly likely you’ll hear an AI assistant on the other end of the line. And the rise of these AI voice assistants is ringing in an entirely next-level landscape for the call center industry as a whole, with ramifications for parties on both sides.

Those impacts? For customers, these voice assistants provide a much higher-quality and more humane experience than the previous generation of automation tools. For the companies utilizing the assistants, the addition of AI empowers them to blow past previous scalability limitations, thus allowing them to grow faster.

Irina Nazarova, CEO at Evil Martians

The rate of AI-facilitated automated interactions with customers is projected to increase by 5x, reaching approximately 10% by 2026, compared to 1.8% in 2022. (Gartner)

OK, users, companies, and AI. But what about us?

For engineers like us, all of this means that the task of integrating voice support agents into our products will become more common. This means we need to know how to effectively approach this task, the tech to use, and the service architectures to employ.

So, let’s do a quick rundown of where we stand today: a typical stack for voice applications is likely to include Twilio. For the AI part, OpenAI is a solid choice, especially since the introduction of the Realtime API.

But it’s not enough—something is missing.

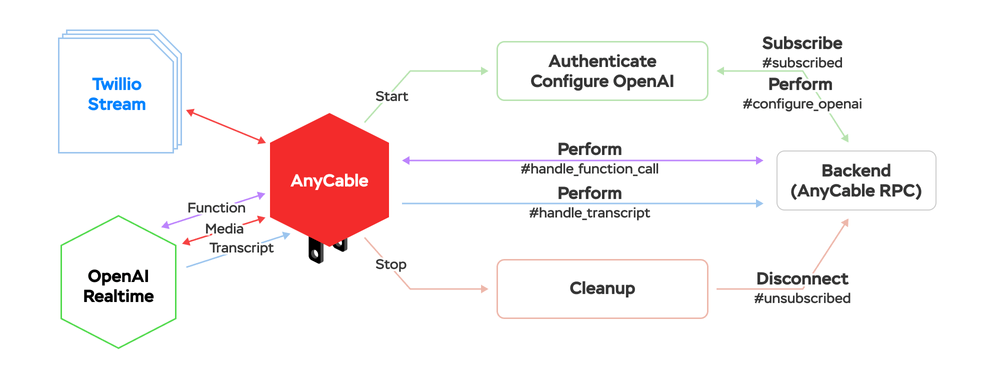

We need a technology that can connect those two things (Twilio and OpenAI), integrate them into an application, and spare engineers the need to deal with all the low-level stuff (like WebSockets) so that we can focus on actual product development instead.

In fact, this technology already exists …and it’s called AnyCable.

So, hopefully the signal is good and you’re reading me loud and clear, because throughout the rest of this post, I’d like to demonstrate using AnyCable as a bridge between your application and Twilio/OpenAI in order to help you build quality AI voice assistants.

We’ll cover the following topics:

- AnyCable for Twilio Streams

- Press “one”, or pre-AI telephony UI

- Real(-time) conversation via OpenAI

- Closing thoughts and future ideas

AnyCable for Twilio Streams

Twilio provides a feature called Media Streams that allows you to consume a phone call as a stream of events and audio packets sent over WebSockets. Consider it like a webhook over WebSockets (so, WebSocketHook). Additionally, the stream is bi-directional, which means that you can also send audio to the other end of the line—just what we need to build a voice assistant!
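To make the "webhook over WebSockets" idea more concrete, here is a minimal Ruby sketch of what consuming such a stream involves: each WebSocket frame is a JSON message with an `event` field. The `describe_twilio_event` helper is hypothetical (it is not part of the demo app), and the sample messages are trimmed down to a few fields of the Twilio format.

```ruby
require "json"

# Hypothetical helper: summarizes a raw Twilio Media Streams message.
def describe_twilio_event(raw)
  msg = JSON.parse(raw)

  case msg["event"]
  when "connected"
    "connected"
  when "start"
    start = msg.fetch("start")
    "start call=#{start["callSid"]} stream=#{start["streamSid"]}"
  when "media"
    # The payload is base64-encoded audio; unpack1("m") decodes base64
    audio = msg.dig("media", "payload").unpack1("m")
    "media track=#{msg.dig("media", "track")} bytes=#{audio.bytesize}"
  when "dtmf"
    "dtmf digit=#{msg.dig("dtmf", "digit")}"
  when "stop"
    "stop"
  else
    "unknown event: #{msg["event"]}"
  end
end

puts describe_twilio_event('{"event":"start","start":{"callSid":"CA123","streamSid":"MZ456"}}')
puts describe_twilio_event('{"event":"media","media":{"track":"inbound","payload":"/4A="}}')
```

In the setup described in this post, this kind of parsing happens in the Go application rather than in Ruby; the sketch only illustrates the shape of the protocol.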

Meanwhile, AnyCable is a realtime server that supports different transports (WebSockets, SSE, and so on) as well as various protocols (like Action Cable, GraphQL, and more).

AnyCable seamlessly integrates with your existing backend, so you can offload low-level realtime functionality to a dedicated server and focus on your product needs. Although AnyCable doesn’t have built-in support for the Twilio Media Streams protocol (yet), you can quickly implement it on top of AnyCable.

In an earlier article, “AnyCable off Rails: connecting Twilio streams with Hanami”, we provided a step-by-step guide on writing a custom AnyCable server with Twilio and speech-to-text capabilities.

Our previous server supported only consuming and analyzing media streams, that is, it only worked in one direction. But this time, we’ll make it bi-directional!

It might seem like we’ve got a lot on our plate

For today’s tutorial, we’ve built a demo application with Ruby on Rails called “On your plate”. It’s a minimalistic task management tool (which may or may not actually include any tasks related to food), that is, a weekly planner powered by Hotwire and AnyCable to ensure a smooth, realtime user experience. Take a look at the app:

Our demo weekly planner app

We’ve got the backend application ready and we’ve also bootstrapped a new AnyCable application using the official project template. Further, we’ve copied the Twilio protocol implementation from our previous demo.

In the end, the baseline project structure looked like this:

app/

channels/twilio/ # <- where all the call management logic lives

application_connection.rb

media_stream_channel.rb

controllers/

twilio/

status_controller.rb # <- handles Twilio webhooks

phone_calls_controller.rb # <- call monitoring dashboard

# ...

models/

# ...

cable/ # <- where our AnyCable application lives

cmd/

internal/

pkg/

cli/

twilio/

encoder.go # <- converts Twilio protocol to AnyCable protocol

executor.go # <- controls media streams

twilio.go # <- Twilio message format structs

# ...

On the Rails side of things, all of the logic required to manage phone calls lives in the Twilio::MediaStreamChannel class. Here, we use Action Cable channels as an abstraction to control media streams, as it suits the realtime nature of the communication well and is supported by AnyCable out-of-the-box:

module Twilio

class MediaStreamChannel < ApplicationChannel

# Called whenever a media stream has started

# (i.e., a call has started)

def subscribed

broadcast_call_status "active"

broadcast_log "Media stream has started"

end

# Called whenever a media stream has disconnected

# (i.e., a call has finished)

def unsubscribed

broadcast_log "Media stream has stopped"

broadcast_call_status "completed"

end

end

end

In a moment, we’ll reveal the meaning of the #broadcast_call_status and #broadcast_log methods. For now, let’s talk about the other side of the cable: our AnyCable Go application.

The twilio.Executor manages Twilio Media Streams on the Go side; take a look at the HandleCommand(session, msg) function (Go’s infamous verbose error handling is omitted here and everywhere below):

func (ex *Executor) HandleCommand(s *node.Session, msg *common.Message) error {

// ...

// This message is sent to indicate the start of the media stream

if msg.Command == StartEvent {

start := msg.Data.(StartPayload)

// Mark as authenticated and store the identifiers

callSid := start.CallSID

streamSid := start.StreamSID

identifiers := string(utils.ToJSON(map[string]string{"call_sid": callSid, "stream_sid": streamSid}))

// Make call ID and stream ID available to the Rails app

// as connection identifiers

ex.node.Authenticated(s, identifiers)

// Subscribe the stream session to the MediaStreamChannel.

// That would trigger the #subscribed callback.

identifier := `{"channel":"Twilio::MediaStreamChannel"}`

ex.node.Subscribe(s, &common.Message{Identifier: identifier, Command: "subscribe"})

return nil

}

// This message carries the actual audio data.

// We'll talk about it later.

if msg.Command == MediaEvent {

// ...

}

// ...

return fmt.Errorf("unknown command: %s", msg.Command)

}

Finally, let’s instruct Twilio to create media streams for phone calls. We can respond to a status webhook event with a TwiML instruction, and we manage webhooks in the Rails application. Here’s a simplified version of the code:

module Twilio
  class StatusController < ApplicationController
    def create
      status = params[:CallStatus]
      broadcast_call_status status

      # Ask Twilio to open a media stream as soon as the call starts ringing
      if status == "ringing"
        return render plain: setup_stream_response, content_type: "text/xml"
      end

      head :ok
    end

    private

    def setup_stream_response
      Twilio::TwiML::VoiceResponse.new do |r|
        r.connect do
          _1.stream(url: TwilioConfig.stream_callback)
        end
        r.say(message: "I'm sorry, I cannot connect you at this time.")
      end.to_s
    end
  end
end

To see the code above in action, we should launch both applications (Rails and AnyCable), set up localhost tunnels (for example, via Ngrok) so that Twilio can send us webhooks and media streams, and then make a call to our Twilio number!

For debugging and monitoring purposes, we’ve also built a monitoring dashboard powered by Hotwire Turbo Streams—and that’s the purpose of the #broadcast_xxx methods.

Phone calls monitoring dashboard

This is our baseline: our AnyCable Go application can consume and understand Twilio Media Streams and communicate with the main (Rails) application. Now, let’s translate this ability to communicate into actual features!

Press “one”, or the pre-AI telephony UI

Alright, alright, let’s not jump into the brave new world of artificial intelligence right away. Instead, we’ll gradually explore the technical capabilities of our baseline setup to get a more comprehensive understanding of what we’re doing here, then go from there.

Let’s first model possible scenarios using some less sophisticated communication tools, for instance, the phone’s keypad, and then progressively enhance from there to conversation-driven interactions.

We’ll start by implementing the following feature (presented as a dialogue below):

- Hi, let's see what's on your plate.

Press 1 to check tasks for today.

Press 2 to check tasks for tomorrow.

Press 3 to check tasks for the whole week.

*Pressed 1*

- You don't have any tasks for today.

The feature could be sub-divided into two technical tasks:

- Sending audio packets from the Rails application to a media stream.

- Handling phone keypad events (dual tone multi-frequency signals, or DTMF) in the Rails application.

Let’s walk through them both, one by one.

Sending audio to Twilio Streams

From the AnyCable and Rails (Action Cable) points of view, a media stream connection is indistinguishable from any other WebSocket connection served by them, so we can use all available options to send data to connected clients, such as #transmit or ActionCable.server.broadcast. All we need to do is prepare the message contents like Twilio expects.

The Twilio documentation has us covered. Here’s the format of the media message from the client to the server:

{

"event": "media",

"streamSid": "MZXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX",

"media": {

"payload": "<base64 encoded raw audio>"

}

}

This is how we can send this kind of message from our channel class in response to the “subscribe” command:

class Twilio::MediaStreamChannel < ApplicationChannel

GREETING = "Hi, let's see what's on your plate..."

def subscribed

# ...

payload = generate_twilio_audio(GREETING)

transmit({

event: "media",

streamSid: stream_sid, # provided via connection identifiers

media: {

payload:

}

})

end

end

And that’s it! Our Go code already knows what to do and transmits the message’s contents directly to the media stream socket.

Yet, the question remains: how to generate the audio? And yeah, that’s a tricky one.

To turn text into speech, we can use the OpenAI Audio API. With the ruby-openai gem, the corresponding code looks like this:

audio = client.audio.speech(

parameters: {

model: "tts-1-hd",

input: phrase,

voice: "echo",

response_format: "pcm"

}

)

We request the PCM format because we don’t need any headers or other meta information, just the raw audio bytes. However, sending what we’ve obtained from OpenAI to Twilio as-is wouldn’t work; Twilio wants audio encoded using the mu-law algorithm. Moreover, Twilio expects audio at a sample rate of 8kHz, whereas OpenAI returns 24kHz.

As a result, the final audio payload generation code looks like this:

def generate_twilio_audio(input, voice: "alloy")

client.audio.speech(

parameters: {

model: "tts-1-hd",

input:,

voice:,

response_format: "pcm"

}

).then { resample_audio(_1) }

.then { G711.encode_ulaw(_1).pack("C*") }

.then { Base64.strict_encode64(_1) }

end

def resample_audio(payload)

samples = payload.unpack("s<*") # OpenAI's PCM output is 16-bit signed little-endian

new_samples = []

# The simplest resampling algorithm: just drop samples.

# The quality turned out to be good enough for phone calls.

(0..(samples.size - 1)).step(3) do |i|

new_samples << samples[i]

end

new_samples

end

The G711 module is a Ruby port of the identically-named Go library I created with the help of AI (which took less time to do than finding an existing Ruby gem; maybe there isn’t one).
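The G711 port itself isn't shown here, so as a rough idea of what the conversion involves, below is a sketch of the classic G.711 mu-law encoding algorithm combined with the "drop two of every three samples" resampling. This is not the author's module: `linear_to_ulaw` and `pcm24k_to_twilio_payload` are hypothetical names, and the sketch assumes the input is 16-bit signed little-endian PCM at 24kHz.

```ruby
ULAW_BIAS = 0x84   # 132, added to the magnitude before locating the segment
ULAW_CLIP = 32_635 # largest magnitude that still fits after adding the bias

# Encodes one signed 16-bit PCM sample into one mu-law byte (0..255).
def linear_to_ulaw(sample)
  sign = sample.negative? ? 0x80 : 0x00
  magnitude = [sample.abs, ULAW_CLIP].min + ULAW_BIAS

  # Find the segment (exponent): the highest set bit between bit 14 and bit 7
  exponent = 7
  mask = 0x4000
  while exponent.positive? && (magnitude & mask).zero?
    exponent -= 1
    mask >>= 1
  end

  mantissa = (magnitude >> (exponent + 3)) & 0x0F
  # mu-law bytes are stored bit-inverted
  ~(sign | (exponent << 4) | mantissa) & 0xFF
end

# 24kHz 16-bit little-endian PCM -> base64-encoded 8kHz mu-law
def pcm24k_to_twilio_payload(pcm)
  samples = pcm.unpack("s<*")
  downsampled = samples.each_slice(3).map(&:first) # keep every third sample
  ulaw_bytes = downsampled.map { linear_to_ulaw(_1) }.pack("C*")
  [ulaw_bytes].pack("m0") # same result as Base64.strict_encode64
end

pcm = [0, 1, 2, 32_767, 5, 6].pack("s<*")
puts pcm24k_to_twilio_payload(pcm) # two output samples: silence and full scale
```

Six input samples become two mu-law bytes, which matches the 24kHz to 8kHz ratio. Dropping samples without a low-pass filter can introduce aliasing, which is the quality trade-off mentioned in the code comments above.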

Once we know how to respond to phone calls with some generated audio, we can now move on to the next phase: handling user interactions.

Handling DTMF signals

“Please switch your phone into touch-tone mode and dial…”—do you remember something like this? Well, if you do, let’s leave this mechanism in the past, because we’re not asking our users to do any manual switching. (Unless, perhaps, we’re actually trying to receive phone calls from the past 🤔)

Telephones have been capable of sending not only audio waves (or pulses) over wire and air, but also digital signals for many years (more precisely, since 1963). This technology is called dual-tone multi-frequency signaling, or DTMF. It allows us to receive user keypad events that indicate which number or symbol has been pressed.

And Twilio supports DTMF signals! So, we only need to propagate them to our backend application to process them.

Conveniently, we can do this via the AnyCable interface for performing channel actions:

func (ex *Executor) HandleCommand(s *node.Session, msg *common.Message) error {

// ...

if msg.Command == DTMFEvent {

dtmf := msg.Data.(DTMFPayload)

ex.performRPC(s, "handle_dtmf", map[string]string{"digit": dtmf.Digit})

return nil

}

// ...

}

func (ex *Executor) performRPC(s *node.Session, action string, data map[string]string) (error) {

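// Callers may pass nil when the action takes no arguments

if data == nil {

data = map[string]string{}

}

data["action"] = action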

payload := utils.ToJSON(data)

identifier := channelId(s)

_, err := ex.node.Perform(s, &common.Message{

Identifier: identifier,

Command: "message",

Data: string(payload),

})

return err

}

The “action” field of the Perform message payload corresponds to the channel class method name. Let’s implement it:

class Twilio::MediaStreamChannel < ApplicationChannel

def handle_dtmf(data)

digit = data["digit"].to_i

broadcast_log "< Pressed ##{digit}"

todos, period =

case digit

when 1 then [Todo.for_today, "today"]

when 2 then [Todo.for_tomorrow, "tomorrow"]

when 3 then [Todo.for_week, "this week"]

end

return unless todos

phrase = if todos.any?

"Here is what you have for #{period}:\n#{todos.map(&:description).join(",")}"

else

"You don't have any tasks for #{period}"

end

transmit_message(phrase)

end

end

Simply, simply, amazing! Do you realize what we’ve just accomplished here?! We’ve just reached a level of automation that’s only been available since the 2010s!

😁 All jokes aside, I do think DTMF is still applicable today. First of all, it can be your fallback option if your AI service isn’t working (or if you’ve burnt all your tokens/dollars).

Second, you can also use it for security purposes: for instance, by asking customers to first enter their PIN and only then, assuming a successful match, initialize an AI agent session. In other words, this mechanism prevents uninvited strangers from talking to the AI.
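As an illustration of the PIN idea, here is a small, framework-free sketch of a gate that could sit in front of the AI session. The `PinGate` class, its return values, and the attempt limit are all hypothetical; in the channel, `press` would be called from `handle_dtmf`, and the agent would only be started once it returns `:granted`.

```ruby
# Hypothetical PIN gate: collects keypad digits until a PIN-length
# sequence has been entered, then reports whether it matched.
class PinGate
  attr_reader :attempts

  def initialize(expected_pin, max_attempts: 3)
    @expected_pin = expected_pin
    @max_attempts = max_attempts
    @digits = +""
    @attempts = 0
  end

  # Feed one DTMF digit; returns :pending, :granted, :retry, or :locked
  def press(digit)
    return :locked if @attempts >= @max_attempts

    @digits << digit.to_s
    return :pending if @digits.size < @expected_pin.size

    entered, @digits = @digits, +""
    # A real check would compare against a stored hash in constant time
    return :granted if entered == @expected_pin

    @attempts += 1
    (@attempts >= @max_attempts) ? :locked : :retry
  end
end

gate = PinGate.new("4821")
results = %w[4 8 2 1].map { gate.press(_1) }
p results # the last element reports whether the PIN matched
```

Keeping this state machine separate from the channel makes it easy to test without a phone call in the loop.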

Real(-time) conversation via OpenAI

Okay, let’s actually start playing with the new and shiny things.

OpenAI recently announced a new API offering: the Realtime API (which is still in beta). This API is specifically designed for voice applications: you set up a bi-directional communication channel with the LLM (over WebSockets), send audio chunks, and receive AI-generated responses as audio and text.

Compared to previous solutions, the significant change here is that there is no intermediate speech-to-text phase; you can send user audio directly to the LLM.

Wait, what? If the user talks directly to the LLM, how can we turn this conversation into an interaction with our system? You’ll learn how soon!

For now, let’s prepare the grounds and integrate OpenAI Realtime sessions into our Go application.

Initializing OpenAI sessions

As we mentioned above, OpenAI uses WebSockets for realtime communication. Thus, we want to create a new AI WebSocket connection as soon as we authorize the media stream in order to start sending audio to the LLM.

We must also provide OpenAI credentials to initiate a connection and configure an OpenAI session (to specify audio codecs and other settings).

Now, when building applications with AnyCable, we try to delegate as much logic as possible to the main app (in our case, the Rails app). This way, we keep the realtime server as “dumb” as possible, meaning we can launch it once and forget it; this approach also makes it reusable, universal.

With this in mind, we’ll use our MediaStreamChannel class to configure OpenAI sessions. To do that, we again leverage the AnyCable Perform interface:

func (ex *Executor) HandleCommand(s *node.Session, msg *common.Message) error {

// ...

if msg.Command == StartEvent {

// Channel subscription logic

// If subscribed successfully, initialize an AI agent

ex.initAgent(s)

return nil

}

// ...

}

func (ex *Executor) initAgent(s *node.Session) error {

// Retrieve AI configuration from the main app

res, err := ex.performRPC(s, "configure_openai", nil)

// We send configuration as a JSON string

var data OpenAIConfigData

json.Unmarshal(res.Data, &data)

conf := agent.NewConfig(data.APIKey)

agent := agent.NewAgent(conf, s.Log)

// KickOff establishes an OpenAI WebSocket connection

agent.KickOff(context.Background())

// Keep the agent struct in the session state for future uses

// (i.e., to send audio or to terminate the agent)

s.WriteInternalState("agent", agent)

return nil

}

The corresponding channel code looks like this:

class Twilio::MediaStreamChannel < ApplicationChannel

def configure_openai

config = OpenAIConfig

reply_with("openai.configuration", {api_key: config.api_key})

end

end

The technical implementation of the #reply_with method is beyond the scope of this blog post. All we need to know is that it allows us to send data back to the Go application (not to the media stream connection, like #transmit).

Additionally, besides the response payload, we also provide an event identifier, which can be used to differentiate payloads and simplify deserialization on the Go side.

Now, let’s take a look at the agent implementation itself. We’ve put it into a separate Go package to make it less dependent on Twilio (and for the sake of the separation of concerns principle). Here’s the code:

package agent

type Agent struct {

conn *websocket.Conn

sendCh chan []byte

log *slog.Logger

}

func NewAgent(c *Config, l *slog.Logger) *Agent {

return &Agent{

// ...

}

}

func (a *Agent) KickOff(ctx context.Context) error {

// Prepare connection parameters

url := a.conf.URL + "?model=" + a.conf.Model

header := http.Header{

"Authorization": []string{"Bearer " + a.conf.Key},

"OpenAI-Beta": []string{"realtime=v1"},

}

// Establish a WebSocket connection

conn, _, err := websocket.DefaultDialer.Dial(url, header)

a.conn = conn

// Send session.update message to configure the session

sessionConfig := map[string]interface{}{

"type": "session.update",

"session": map[string]interface{}{

"input_audio_format": "g711_ulaw",

"output_audio_format": "g711_ulaw",

"input_audio_transcription": map[string]string{

"model": "whisper-1",

},

},

}

configMessage := utils.ToJSON(sessionConfig)

a.sendMsg(configMessage)

// Set up reading and writing go routines

go a.readMessages()

go a.writeMessages()

return nil

}

type Event struct {

Type string `json:"type"`

}

func (a *Agent) readMessages() {

for {

_, msg, err := a.conn.ReadMessage()

typedMessage := Event{}

json.Unmarshal(msg, &typedMessage)

switch typedMessage.Type {

case "session.created":

// many other event types

case "response.done":

case "error":

a.log.Error("server error", "err", string(msg))

}

}

}

func (a *Agent) writeMessages() {

for {

select {

case msg := <-a.sendCh:

if err := a.conn.WriteMessage(websocket.TextMessage, msg); err != nil {

return

}

}

}

}

func (a *Agent) sendMsg(msg []byte) {

a.sendCh <- msg

}

The code above demonstrates the basics of creating and interacting with a WebSocket client (that is, reading and writing messages).

The most significant bit there is the session configuration: first, we must ensure that the correct audio format is specified (“g711_ulaw”) so the in and out streams are compatible with Twilio Media Streams. Second, we need to enable input audio transcription (using the “whisper-1” model). After all, it’s hard to imagine a use case where you don’t need to know what the user said. Plus, we’ll use transcriptions for debugging purposes.

In the readMessages function, we first extract the event type to later add specific handlers for specific events.

With that, we’ve configured the AI agent and associated it with the media stream. It’s now time to do something about audio channels!

Connecting the streams

Now, with the agent ready, we can, naturally, send it user audio and receive audio responses!

On the Twilio executor side of things, we need to propagate audio packets to the agent:

func (ex *Executor) HandleCommand(s *node.Session, msg *common.Message) error {

// ...

if msg.Command == MediaEvent {

twilioMsg := msg.Data.(MediaPayload)

// Ignore robot streams

if twilioMsg.Track == "outbound" {

return nil

}

audioBytes := base64.StdEncoding.DecodeString(twilioMsg.Payload)

ai := ex.getAI(s)

ai.EnqueueAudio(audioBytes)

return nil

}

}

The code above is pretty self-explanatory. So, next up, let’s look at what happens on the agent side:

func (a *Agent) EnqueueAudio(audio []byte) {

a.buf.Write(audio)

if a.buf.Len() > bytesPerFlush {

a.sendAudio(a.buf.Bytes())

a.buf.Reset()

}

}

func (a *Agent) sendAudio(audio []byte) {

encoded := base64.StdEncoding.EncodeToString(audio)

msg := []byte(`{"type":"input_audio_buffer.append","audio": "` + encoded + `"}`)

a.sendMsg(msg)

}

Note that we’re not sending audio packets immediately (Twilio transmits one every 20ms); instead, we buffer them first. This way, we reduce the number of outgoing messages.
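To get a feel for the numbers, here is the same buffering logic sketched in Ruby. At 8kHz mu-law (one byte per sample), a 20ms packet carries 160 bytes. The threshold below is an assumption for illustration only; the post doesn't state the actual value of bytesPerFlush, and `AudioBuffer` is a hypothetical stand-in for the Go agent's buffer.

```ruby
# 8000 samples/s * 1 byte/sample * 0.020 s = 160 bytes per Twilio packet
BYTES_PER_PACKET = 160
BYTES_PER_FLUSH = 5 * BYTES_PER_PACKET # hypothetical threshold (~100ms of audio)

class AudioBuffer
  def initialize(&on_flush)
    @buf = String.new(encoding: Encoding::BINARY)
    @on_flush = on_flush
  end

  # Accumulates chunks and flushes once the buffer exceeds the threshold,
  # mirroring the `>` comparison in the Go code
  def enqueue(chunk)
    @buf << chunk
    return if @buf.bytesize <= BYTES_PER_FLUSH

    @on_flush.call(@buf.dup)
    @buf.clear
  end
end

flushes = []
buffer = AudioBuffer.new { |audio| flushes << audio.bytesize }
12.times { buffer.enqueue("\xFF".b * BYTES_PER_PACKET) }
p flushes # sizes of the messages that would be sent to OpenAI
```

With these assumed values, twelve incoming packets turn into two outgoing messages. A larger threshold means fewer messages but adds latency before the model hears the user.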

We now need to figure out how to send OpenAI responses to the media stream. So, we’ll introduce callbacks for communication in the opposite direction: one to handle audio responses from OpenAI and another to handle transcripts. On the agent side, we need to define the corresponding event handlers:

func (a *Agent) readMessages() {

for {

// ...

switch typedMessage.Type {

case "response.audio.delta":

var event *AudioDeltaEvent

json.Unmarshal(msg, &event)

a.audioHandler(event.Delta, event.ItemId)

case "conversation.item.input_audio_transcription.completed":

var event *InputAudioTranscriptionCompletedEvent

_ = json.Unmarshal(msg, &event)

a.transcriptHandler("user", event.Transcript, event.ItemId)

case "response.audio_transcript.delta":

var event *AudioTranscriptDeltaEvent

json.Unmarshal(msg, &event)

a.transcriptHandler("assistant", event.Delta, event.ItemId)

case "response.audio_transcript.done":

var event *AudioTranscriptDoneEvent

json.Unmarshal(msg, &event)

a.transcriptHandler("assistant", event.Transcript, event.ItemId)

}

}

}

Now, let’s attach the handlers in the executor:

func (ex *Executor) initAgent(s *node.Session) error {

// ...

agent := agent.NewAgent(conf, s.Log)

agent.HandleTranscript(func(role string, text string, id string) {

ex.performRPC(s, "handle_transcript", map[string]string{"role": role, "text": text, "id": id})

})

agent.HandleAudio(func(encodedAudio string, id string) {

val := s.ReadInternalState("streamSid")

streamSid := val.(string)

s.Send(&common.Reply{Type: MediaEvent, Message: MediaPayload{Payload: encodedAudio}, Identifier: streamSid})

})

// ...

return nil

}

Transcripts are sent to the backend application (as the #handle_transcript method calls), and audio contents are sent directly to the media stream socket.

Hey, we can now actually talk with our AI agent over the phone!

But what are we going to talk about? The weather? Pets? The point is, we’re not just trying to build a voice interface to ChatGPT, we’re crafting a specialized assistant. So, let’s continue.

Prompts and tools

The first step towards AI personalization is coming up with a prompt. The prompt is the heart (or soul?) of your AI agent. We must create a personality, provide clear instructions, and (especially important in our case) introduce some guardrails.

Since we’re streaming AI-generated audio directly to a human customer, we must be very cautious about what is being said. We don’t want the AI to ask people, “What’s the name of your pet?” (true story), or discuss other questionable topics.

Technically speaking, you could send generated audio only after you’ve analyzed the output transcript and ensured it’s not harmful. However, doing this could demand a noticeable amount of time and make the conversation less realistic.

That said, OpenAI allows you to specify instructions for the session (and even update them during its lifetime) via the same session.update event. We can populate them during our #configure_openai step:

def configure_openai

config = OpenAIConfig

reply_with("openai.configuration", {api_key: config.api_key, prompt: config.prompt})

end

The changes on the Go side are similar to the ones above:

sessionConfig := map[string]interface{}{

"type": "session.update",

"session": map[string]interface{}{

+ "instructions": a.conf.Prompt,

"input_audio_format": "g711_ulaw",

"output_audio_format": "g711_ulaw",

"input_audio_transcription": map[string]string{

"model": "whisper-1",

},

},

}

Note that we’re using a static prompt which is the same for all users. However, it might be helpful to populate the instructions with some personalized information or context. For instance, for our application, we might include the list of incomplete tasks in the instructions so the AI could talk about them without using any additional tools.
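A personalized prompt could be assembled along these lines. This is only a sketch: `Task` is a plain Struct standing in for the Todo model, and `personalized_prompt` is a hypothetical helper that the #configure_openai step could call instead of returning the static prompt.

```ruby
# Stand-in for the Todo model, so the sketch runs without Rails
Task = Struct.new(:description, :deadline, keyword_init: true)

BASE_PROMPT = "You are a voice assistant focused solely on weekly planning and task management."

# Appends the user's incomplete tasks to the static instructions
def personalized_prompt(base, tasks)
  return "#{base}\n\nThe user has no incomplete tasks." if tasks.empty?

  lines = tasks.map { |t| "- #{t.description} (due #{t.deadline})" }
  "#{base}\n\nThe user's incomplete tasks:\n#{lines.join("\n")}"
end

tasks = [
  Task.new(description: "Book a dentist appointment", deadline: "2024-11-14"),
  Task.new(description: "Buy groceries", deadline: "2024-11-15")
]
puts personalized_prompt(BASE_PROMPT, tasks)
```

The trade-off is that the context is a snapshot taken when the session starts, so anything changed during the call would still need a tool call or a session update.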

Wait, tools? Yes, let’s take a look at our prompt first:

You are a voice assistant focused solely on weekly planning and task management.

Your only purpose is to help users manage their todos within the app.

Core functions:

- Browse tasks (today, tomorrow, this week)

- Add new tasks

- Mark tasks complete

Response rules:

- Keep responses under 2 sentences

- Always use function calls for actions

- Confirm actions with brief acknowledgments

- Stay strictly within app features

Do not:

- Suggest features not in the app

- Discuss topics unrelated to tasks/planning

- Give advice beyond task management

- Engage in general conversation

- Make promises about future features

- Explain your limitations or nature

Example responses:

- "You have no tasks today. Congrats!"

- "Added 'Dentist appointment' to Thursday. Need anything else?"

- "Task marked complete. You have 4 remaining today."

As you can see, we’re working hard to convince the AI to stay within the constraints and capabilities of the application.

Further, to serve a particular user’s needs, we can provide functions (“Always use function calls for actions”). OpenAI Realtime allows you to specify a list of functions that can be invoked by the assistant to fulfill tasks or gather additional information—and this is how we can open the door to our application for the AI agent.

As with instructions, we provide the tool configuration (in JSON Schema format) as a part of the session.update payload:

def configure_openai

config = OpenAIConfig

tools = [

{

type: "function",

name: "get_tasks",

description: "Fetch user's tasks for a given period of time",

parameters: {

type: "object",

properties: {

period: {

type: "string",

enum: ["today", "tomorrow", "week"]

}

},

required: ["period"]

}

},

{

type: "function",

name: "create_task",

description: "Create a new task for a specified date",

parameters: {

# ...

}

},

{

type: "function",

name: "complete_task",

description: "Mark a task as completed",

parameters: {

# ...

}

}

].to_json

reply_with("openai.configuration", {api_key: config.api_key, prompt: config.prompt, tools:})

end

Then, whenever the agent wants to use a tool, it sends us a response.output_item.done event with the function_call item; and we delegate the function call to the main app:

// pkg/agent/agent.go

func (a *Agent) readMessages() {

for {

// ...

switch typedMessage.Type {

case "response.output_item.done":

var event *OutputItemDoneEvent

json.Unmarshal(msg, &event)

item := event.Item

if item.Type == "function_call" {

a.functionHandler(item.Name, item.Arguments, item.CallID)

}

}

}

}

func (a *Agent) HandleFunctionCallResult(callID string, data string) {

item := &Item{Type: "function_call_output", CallID: callID, Output: data}

msg := struct {

Type string `json:"type"`

Item *Item `json:"item"`

}{"conversation.item.create", item}

encoded := utils.ToJSON(msg)

a.sendMsg(encoded)

// Send `response.create` message right away to trigger model inference

a.sendMsg([]byte(`{"type":"response.create"}`))

}

// pkg/twilio/executor.go

agent.HandleFunctionCall(func(name string, args string, id string) {

res, err := ex.performRPC(s, "handle_function_call", map[string]string{"name": name, "arguments": args})

if res != nil && res.Event == "openai.function_call_result" {

agent.HandleFunctionCallResult(id, string(res.Data))

}

})

All that’s left is to implement the #handle_function_call method in the channel class:

def handle_function_call(data)

name = data["name"]

args = JSON.parse(data["arguments"], symbolize_names: true)

case [name, args]

in "get_tasks", {period: "today" | "tomorrow" | "week" => period}

range = case period

when "today"

Date.current.all_day

when "tomorrow"

Date.tomorrow.all_day

when "week"

Date.current.all_week

end

todos = Todo.incomplete.where(deadline: range).as_json(only: [:id, :deadline, :description])

reply_with("openai.function_call_result", {todos:})

in "create_task", {date: String => deadline, description: String => description}

todo = Todo.new(deadline:, description:)

if todo.save

reply_with("openai.function_call_result", {status: :created, todo: todo.as_json(only: [:id, :deadline, :description])})

else

reply_with("openai.function_call_result", {status: :failed, message: todo.errors.full_messages.join(", ")})

end

in "complete_task", {id: Integer => id}

todo = Todo.find_by(id:)

if todo

todo.update!(completed: true)

reply_with("openai.function_call_result", {status: :completed})

else

reply_with("openai.function_call_result", {status: :failed, message: "Task not found"})

end

end

end

Our integration is now complete! This means that, from now on, we only need to touch our main application (Rails) to change the instructions or modify the set of tools. Meanwhile, the Go service (AnyCable) requires just some occasional maintenance.
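To recap the tool round trip in terms of wire messages, here is a Ruby sketch of the same exchange the Go agent performs: a function_call item comes in, and a function_call_output item followed by response.create goes out. The `function_call_replies` helper is hypothetical, and its block stands in for the channel's #handle_function_call.

```ruby
require "json"

# Given a raw `response.output_item.done` event, returns the JSON messages
# to send back to OpenAI (or an empty list if it's not a function call).
def function_call_replies(raw_event)
  event = JSON.parse(raw_event)
  return [] unless event["type"] == "response.output_item.done"
  return [] unless event.dig("item", "type") == "function_call"

  item = event["item"]
  # Arguments arrive as a JSON-encoded string, not as a nested object
  args = JSON.parse(item["arguments"], symbolize_names: true)
  result = yield(item["name"], args)

  [
    {
      type: "conversation.item.create",
      item: {type: "function_call_output", call_id: item["call_id"], output: result.to_json}
    },
    # Ask the model to continue the conversation using the tool result
    {type: "response.create"}
  ].map(&:to_json)
end

raw = {
  type: "response.output_item.done",
  item: {type: "function_call", name: "get_tasks", call_id: "call_1", arguments: '{"period":"today"}'}
}.to_json

replies = function_call_replies(raw) { |name, args| {todos: [], requested: [name, args[:period]]} }
puts replies
```

Note that the tool output is itself serialized as a JSON string inside the message, just like the arguments are on the way in.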

Voice UI demo

You can find all the source code on GitHub: anycable/twilio-ai-demo. Feel free to fork and star!

Closing thoughts and future ideas

AI-based voice assistants are going to become more and more popular. Just think about potential use cases: booking a stylist or a doctor appointment, reporting a lost credit card, making dinner reservations, and the list goes on.

Fortunately, thanks to the evolution of LLMs, building AI-based voice agents today is less like rocket science and more like an everyday task (maybe let’s just call it “phone science”? Sure sounds less intimidating!)

Sure, we’ve been able to provide automated support to customers for many years with just algorithms. However, AI humanizes the user experience to the next level. AnyCable plays a similar role for engineers, allowing you to offload all the low-level functionality to a separate logic-less server so you can focus on your product needs and continue building within your existing application.

The technology has also reached the phase where it can handle boring tasks pretty well. AI can deal with regional accents and different languages; if not confident enough, it automatically asks for user confirmation before performing a task—no code required!

Feel free to grab our demo application and try to integrate the proposed concepts into your application. The Go component can be used with any backend (after all, it is AnyCable); you just need to implement the AnyCable RPC interface. You can also use our Rails implementation as a reference.

Bonus: Ruby metaprogramming vs. OpenAI tools

This is a special section for my fellow Rubyists and those who still don’t understand why (and how) it keeps us happy.

The code we wrote for the OpenAI tools, schema generation, and function call handling, well, it makes me sad. The amount of boilerplate is far beyond what I expected (to be fair, I do have high standards). If I wanted to write like this, I’d have chosen Go (oh, wait…).

Whenever I’m designing Ruby APIs, I evaluate the result by asking, “Is the amount of code here about the same as the amount of plain text required for a human being to solve or understand this task?” This kind of thinking helps to keep pushing the boundaries.

Regarding the OpenAI tools, it’s clear to me that providing a list of Ruby methods with descriptions is enough for a human to compile a schema in their brain. So, I started looking for ways to achieve this with Ruby. Thus, I came up with the following code:

def configure_openai

# ...

tools = self.class.openai_tools_schema.to_json

reply_with("openai.configuration", {api_key:, voice:, prompt:, tools:})

end

def handle_function_call(data)

name = data["name"].to_sym

return unless self.class.openai_tools.include?(name)

args = JSON.parse(data["arguments"], symbolize_names: true)

result = public_send(name, **args)

reply_with("openai.function_call_result", result)

end

# Fetch user's tasks for a given period of time.

# @rbs (period: (:today | :tomorrow | :week)) -> Array[Todo]

tool def get_tasks(period:)

range = case period

when "today"

Date.current.all_day

when "tomorrow"

Date.tomorrow.all_day

when "week"

Date.current.all_week

end

{todos: Todo.incomplete.where(deadline: range).as_json(only: [:id, :deadline, :description])}

end

# Create a new task for a specified date

# @rbs (deadline: Date, description: String) -> {status: (:created | :failed), ?todo: Todo}

tool def create_task(deadline:, description:)

todo = Todo.new(deadline:, description:)

if todo.save

{status: :created, todo: todo.as_json(only: [:id, :deadline, :description])}

else

{status: :failed, message: todo.errors.full_messages.join(", ")}

end

end

# Mark a task as completed

# @rbs (id: Integer) -> {status: (:completed | :failed), ?message: String}

tool def complete_task(id:)

todo = Todo.find_by(id: id)

if todo

todo.update!(completed: true)

{status: :completed}

else

{status: :failed, message: "Task not found"}

end

end

No more manual tools schema generation. You just add a Ruby method, mark it as a tool, provide an optional description, an RBS type signature, and the tool configuration is created for you automatically. And it’s always in sync with your methods!
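The post doesn't show how the `tool` macro works, so here is one way such a registry could be sketched, relying on the fact that `def` evaluates to the method name as a Symbol. `ToolRegistry` and `PlannerTools` are hypothetical names, and the sketch is deliberately simplified: it derives parameter names from method signatures but types everything as a string, whereas the version described above reads descriptions from comments and types from RBS signatures.

```ruby
require "json"

# Hypothetical registry module; extend it in a class to get the `tool` macro.
module ToolRegistry
  def openai_tools
    @openai_tools ||= []
  end

  # `def` returns the method name, so `tool def foo` passes :foo here
  def tool(method_name)
    openai_tools << method_name
    method_name
  end

  def openai_tools_schema
    openai_tools.map do |name|
      keywords = instance_method(name).parameters.select { |kind, _| %i[key keyreq].include?(kind) }
      {
        type: "function",
        name: name.to_s,
        parameters: {
          type: "object",
          # Every parameter is typed as a string; real types would need RBS
          properties: keywords.to_h { |_, param| [param, {type: "string"}] },
          # Keyword arguments without defaults are the required ones
          required: keywords.select { |kind, _| kind == :keyreq }.map { |_, param| param.to_s }
        }
      }
    end
  end
end

class PlannerTools
  extend ToolRegistry

  tool def get_tasks(period:, limit: "10")
    []
  end

  def not_a_tool; end
end

puts JSON.pretty_generate(PlannerTools.openai_tools_schema)
```

Because the schema is computed from the method objects, renaming a keyword argument changes the generated configuration automatically, which is the "always in sync" property mentioned above.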

The code for this version lives in a separate branch.

📞 TTYL!