Midjourney vs. human illustrators: has AI already won?

In the age of artificial intelligence, the ability to generate realistic images from text prompts is no longer a distant, futuristic concept: it's a present-day reality. So, is it time for designers to delegate some (or all) of their work to AI? In this article, we'll pit human illustrators against AI artists and try to judge whether we've already passed the point of no return. We'll give the same prompts and guidelines to both the humans and the AI and compare the resulting illustrations side by side.

Other parts:

- Midjourney vs. human illustrators: has AI already won?

- Midjourney vs. human illustrators II: more Martians join the battle!

In the world of digital illustration, quickly and accurately generating complex 3D images has always been a challenge. But with the newest versions of text-to-image AI models such as Midjourney, DALL-E, and Stable Diffusion, this process can become significantly easier and more efficient.

Let’s briefly overview each of these models.

Popular text-to-image AI models

DALL-E is a second-generation product developed by the nonprofit OpenAI (backed by Elon Musk, amongst others). It’s trained on millions of stock images and, in my view, is superior to the others for creating photorealistic results.

Stable Diffusion, introduced in August 2022, is an open-source project that allows users to download and run its software locally. Of the AI image generators mentioned, Stable Diffusion has had the greatest market impact, since creators can retrain the model for a particular task and sell it as a service.

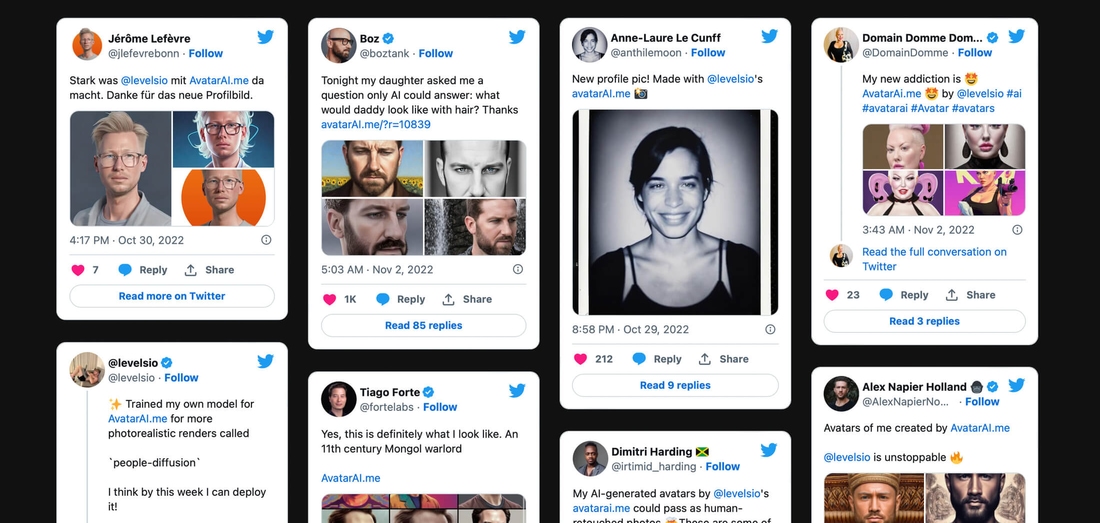

Avatarai.me allows you to quickly generate nice profile pics with Stable Diffusion

Midjourney is a startup founded by David Holz that focuses on the art community. From my point of view, it offers the wildest creative options of the three. Moreover, with the alpha of version 4 (released in November 2022), it began to produce astonishingly high-quality results, almost indistinguishable from the work of a human artist.

The technology is there, it's accessible, and the results are remarkable. So, as a designer, I have to address the elephant in the room: is it time to finally delegate part of my work to AI?

Introducing our experiment

For this challenge, we'll use Midjourney. I immediately had a task in mind that would lend itself well to a human-vs-AI comparison: almost every week, our blog (which you're reading right now) publishes articles, each of which needs a unique, thematically appropriate illustration. These are created by our own designers or by one of several freelance illustrators. (All of these designers, crucially, are humans.)

My responsibilities include art direction: proposing concepts, correcting compositions if needed, and ensuring the technical parameters are met. A number of illustrators have been working with us for quite some time, so, in general, the art direction is minimal. However, this process still takes 1–2 weeks on average. During this time, we have to passively wait for sketches and renders.

These illustrations also follow a certain style: we try to ensure that 90% of the covers are made in a 3D isometric view, have a fairly "plastic" and vivid look, and use a solid background. The typical illustration size is 1200×1000 pixels. We'll take these constants as the technical parameters for our experiment.
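The 1200×1000 size reduces to a 6:5 width:height ratio, which is the same whole-number form as the --ar 3:2 parameter used in the prompts below. Because those prompts ask for 3:2, a finished render would still need cropping or resizing to hit the blog's size. Here is a minimal Python sketch of that arithmetic; the helper names are our own, hypothetical ones, and the 1500×1000 input is just an example size, not Midjourney's actual output resolution.

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> str:
    """Reduce pixel dimensions to the smallest whole-number W:H ratio."""
    divisor = gcd(width, height)
    return f"{width // divisor}:{height // divisor}"


def crop_to_ratio(width: int, height: int, ratio_w: int, ratio_h: int) -> tuple[int, int]:
    """Size of the largest crop of a width x height image with ratio ratio_w:ratio_h."""
    # First try keeping the full height and trimming the sides
    cropped_width = height * ratio_w // ratio_h
    if cropped_width <= width:
        return cropped_width, height
    # Otherwise the image is too narrow: keep the full width, trim top and bottom
    return width, width * ratio_h // ratio_w


print(aspect_ratio(1200, 1000))         # 6:5
print(crop_to_ratio(1500, 1000, 6, 5))  # (1200, 1000)
```

Cropping the example 3:2 render to 6:5 keeps the full height and trims 150 px from each side.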

A classic illustration for the Evil Martians blog

As for the concepts: we’ll use three illustrations already created for our blog. Specifically, we’ll copy the original request we sent to our human illustrators and try using it as a prompt for Midjourney.

Metrics

To reach some kind of final verdict, we need criteria for evaluating the results. To that end, I came up with the following list:

- Active time spent on the art direction for illustrator vs. neural network: concept refinements and composition adjustments for the artist, prompt tweaks for Midjourney.

- Overall creation time, from request to final result.

- A comparison of approximate financial costs.

- Which cover pic ultimately looks more appealing?

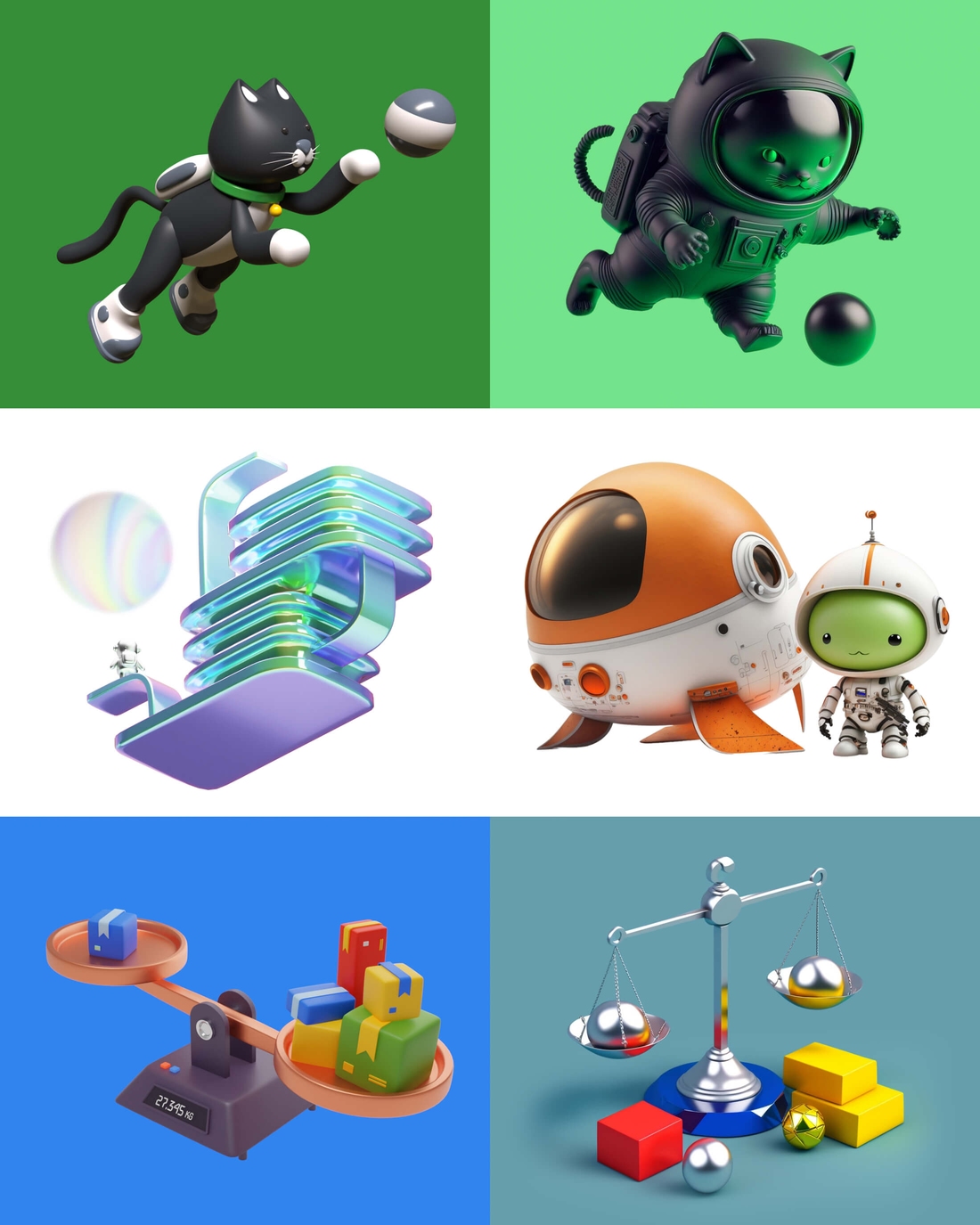

Task №1: a cat and a ball

Human artist

The request we sent to the illustrator:

“We’re writing an article about migrating from CircleCI to GitHub Actions. Their logos are a circle and a cat (see attachments). So this is the idea—the cat is playing with a frisbee, ball, or other round object. Can you design the cutest kitty in the world? You can even make it a little Martian if you want.”

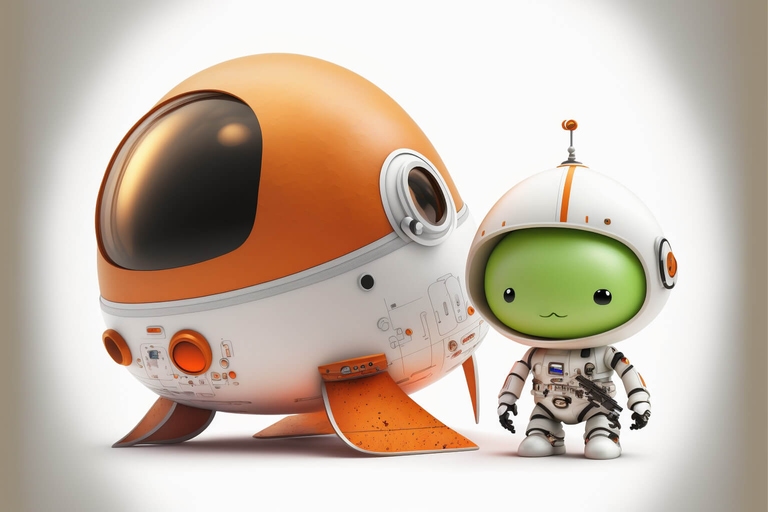

After 4 days, the artist sent fairly detailed renders, designed in a beautiful “toy” style and meeting all the technical parameters:

We took the green version with the ball. Our only request was to slightly change the tail's shape and the size of the cat's muzzle. The result came back the next day, and everyone loved it. In all, the process took 6 days, with half an hour of active art direction at most.

AI artist

With Midjourney, everything starts with a prompt you send to a dedicated Discord chat:

/imagine: pixar style 3d image of the cat in a spacesuit playing with a ball on solid green background --ar 3:2 --v 4

The last two arguments are parameters: --ar sets the aspect ratio, and --v 4 selects version 4, the newest Midjourney model, for the highest-quality results. The phrase "pixar style 3d" indicates the style I'd like to reference.
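Since the parameters stay the same from one attempt to the next while the description keeps changing, it can help to keep the two apart. The sketch below is a hypothetical Python helper of our own, not part of Midjourney (prompts are still typed into Discord after /imagine). It joins description fragments with commas and appends the --ar and --v parameters described above; the example call reproduces the final cat prompt from later in this task.

```python
def build_prompt(parts: list[str], aspect_ratio: str = "3:2", version: int = 4) -> str:
    """Join description fragments and append Midjourney-style parameters."""
    # Skip empty fragments so stray commas don't end up in the prompt
    description = ", ".join(part.strip() for part in parts if part.strip())
    return f"{description} --ar {aspect_ratio} --v {version}"


prompt = build_prompt([
    "plastic toy cat in a spacesuit jumping and playing with a ball",
    "black on solid green background",
    "Pixar style isometric 3d render",  # style suffix reused across tasks
])
print(prompt)
```

Swapping only the first fragment lets you try a new subject while the style and parameters stay fixed.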

Here’s the result, which I got back immediately:

OK, this looks amusing, but our classic "plastic" style is missing. Let's add the word "plastic" to the prompt: pixar style 3d of a toy plastic cat in a spacesuit playing with a ball on solid green background --ar 3:2 --v 4

Now it definitely looks plastic, but it's kinda scary, and some body parts are missing.

Let’s repeat the prompt, but try to make the cat black and more reminiscent of the GitHub mascot: pixar style 3d of a black toy plastic cat in a spacesuit playing with a ball on solid green background --ar 3:2 --v 4

I repeated the prompt several times, but the composition always seemed to lack dynamism. Let's try adding some words that suggest motion: plastic toy cat in a spacesuit jumping and playing with a ball, black on solid green background, Pixar style isometric 3d render --ar 3:2 --v 4

Now we’re talking! The composition looks interesting and quite dynamic. The word “jumping” immediately added the missing momentum. The only thing left is to generate some variations:

And we’ll upscale the one I like:

Midjourney isn't very good at producing the kind of uniform backgrounds our blog requires, so I'll use one more AI tool, Remove.bg, to finalize the image. And here we are! It took me about an hour and a half of active time to get here.

Task №2: a Martian spaceship

Human artist

As with the previous image, we suggested an idea, but this time it was more vague:

“Hi! We’ll have a big event soon: our new website release. Along with the release, we want to publish an article about our inspirations and how we created its design. For the illustration, we’d like: a white background, possibly with color spots in the center, and a white object on it, or an iridescent object on pure white. For the concept: something about the new Martian starship, bright and shiny.”

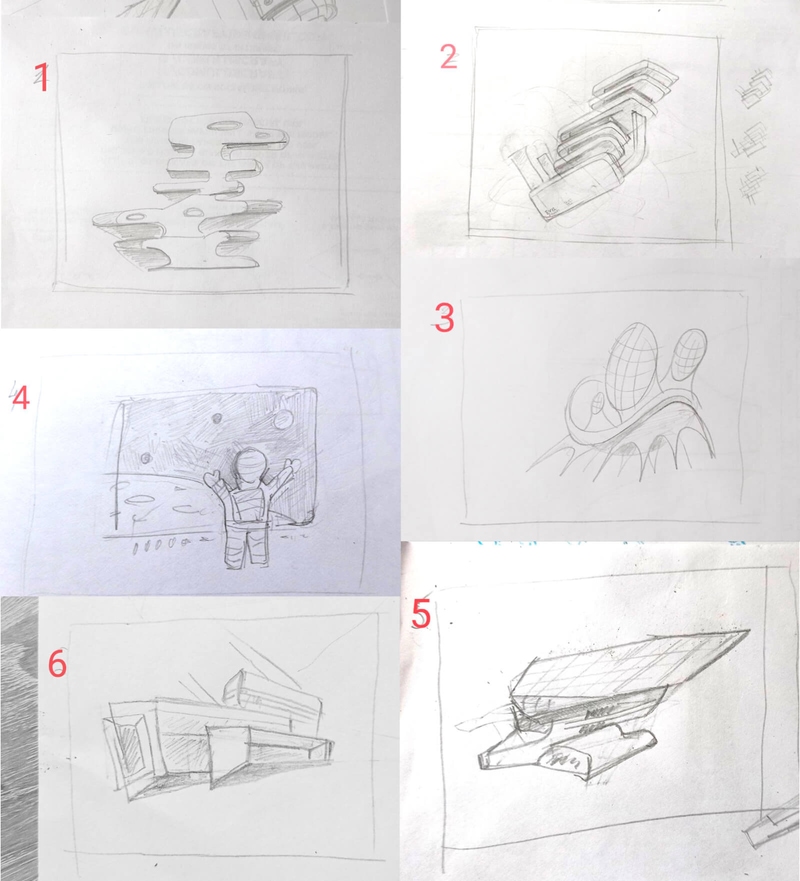

After 8 days (there was no rush with this article), the illustrator sent sketches of various spaceships in a futuristic style and one version “from inside the spaceship.”

We chose options 2 and 5 for further fine-tuning. After another 5 days, the illustrator sent well-detailed renders:

After choosing the spaceship and experimenting with the planet's shape, we ended up with the final result. It took 2 weeks, but only about an hour of active art direction time.

AI artist

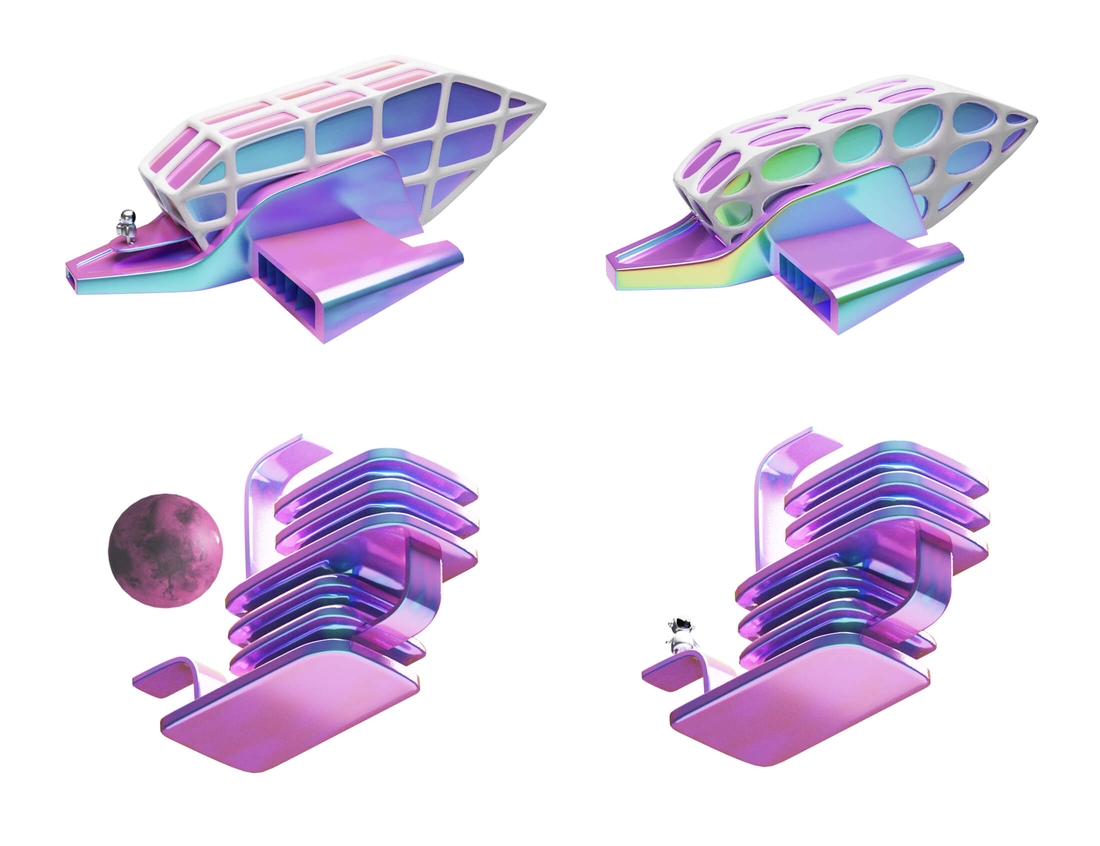

/imagine: pixar style 3d composition of the martian spaceship and tiny astronaut pearlescent on white background --ar 3:2 --v 4

This time I was immediately impressed with the first result! I like how the spaceship recalls a “Finding Nemo” character, and I think this illustrates the idea of a new Martian starship quite nicely. That said, it’s not white or pearlescent, but I still like it.

Let’s upscale the most promising one:

The Light Upscale Redo option can help get rid of unnecessary detail:

After applying Remove.bg the image looks ready to be published. The entire process took me 20 minutes of active time. That was fast!

Task №3: a scale of balance

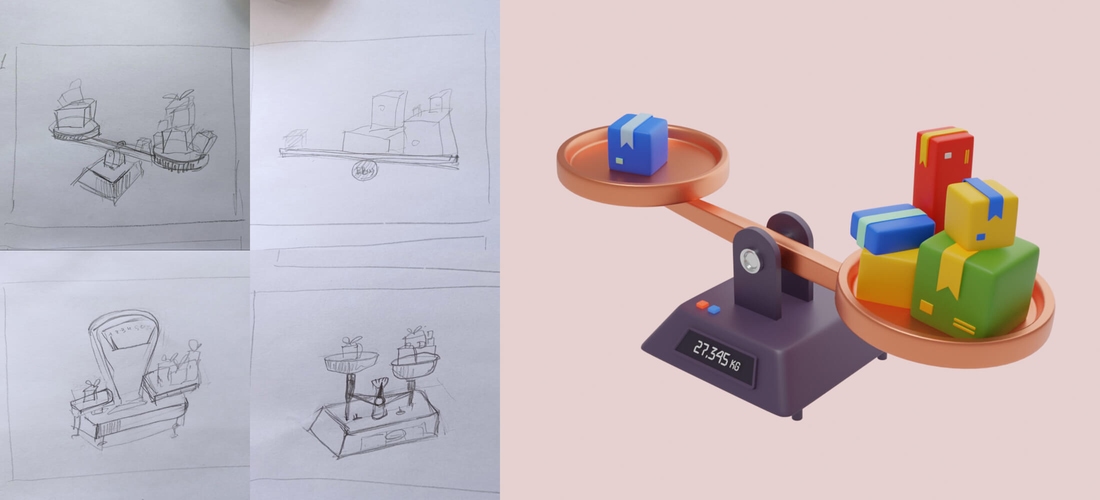

Human artist

“We need an illustration for a new article. It’s about a project we had that helps eBay sellers trade on large European marketplaces and earn more profits. I have a couple of ideas so far. The first one is about two cylinder hats, one smaller, one bigger. The rabbit jumps into the bigger and richer one. The second is about a scale or balance, with a few goods (parcels, gifts) on one side and many of them on the other.”

Our artist liked the second idea, and after 6 days we got back the first sketches. It took 3 more days to create the render:

Our only request was to change the background to a color associated with the brand. It was ready the next day. This took 10 days in total, with half an hour of active art direction time.

AI artist

/imagine: plastic toy balance scales with one parcel on one side and multiple parcels on the other, in greens, yellows and red, solid blue background, Pixar style isometric 3d render --ar 3:2 --v 4

This prompt sounds like it would be pretty easy, doesn't it? But oddly enough, for the AI it wasn't. I kept rerunning the prompt, changing the types of objects on the scales, but kept getting surreal configurations:

For some reason, the AI only started getting realistic scales when I specified that the plastic toy should be silver. Apparently, in the AI’s mind (…?) this configuration somehow made sense. The combination of scales and gifts, unfortunately, still did not:

After two and a half hours of back and forth with the AI, I was completely exhausted and decided to just upscale the most promising result. This time the background was already uniform, so no background removal was needed.

The “render” quality is decent, but the whole idea is missing

Summing up the problems

After conducting this experiment, I found that the more complex and well-planned the concept, the less effective the AI was at creating the desired illustration. While it performed well with a simple subject like a cat, more intricate and detailed illustrations gave us Kafkaesque results.

With Midjourney, you can’t just “make the ball bigger” or “make the character smile”—you have to change the entire image. Solving this problem by making collages out of multiple results is an option, but where is the line between drawing the illustration yourself and manually correcting the AI’s work?

AI is not a magic box that immediately gives you the “perfect” image you’re after. Instead, good images come about as the result of a long dialogue between a human and AI. The human must also have a good understanding of art style and a bit of creative vision.

Another issue is that there is still no good solution for animation (at least none that I am aware of). If you want a smoothly animated cover for an article like this one, only a human artist will suffice.

The score

Let’s weigh the pros and cons to make our final decision and calculate the costs. Which of the following pictures do you prefer?

The cover illustrations for our blog posts created by human designers are on the left, and those generated with Midjourney on the right

Time requirements

Designing illustrations with Midjourney took between 20 minutes and 2.5 hours of active art direction time per illustration. A decent result can come quickly, though more time might also have produced an even better one.

Working with human artists took anywhere from 6 days to 2 weeks, but only required about 0.5–1 hour of active art direction time per illustration.

It's a classic trade-off: you can either entrust everything to a skilled artist or carefully oversee every step of the AI's process. Personally, I would choose the first option.
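Tallying the figures reported in the three tasks makes this trade-off concrete. The Python sketch below simply sums them; the values are approximate readings of the text (for example, "half an hour, maximum" counts as 30 minutes and "2 weeks" as 14 days).

```python
# Approximate active art-direction time per task, in minutes, from the tasks above
active_minutes = {
    "cat and ball": {"human": 30, "midjourney": 90},
    "spaceship": {"human": 60, "midjourney": 20},
    "balance scales": {"human": 30, "midjourney": 150},
}

# Calendar days from request to final illustration for the human artists
human_days = {"cat and ball": 6, "spaceship": 14, "balance scales": 10}


def totals() -> tuple[int, int, int]:
    """Return (human active minutes, Midjourney active minutes, human calendar days)."""
    human = sum(task["human"] for task in active_minutes.values())
    midjourney = sum(task["midjourney"] for task in active_minutes.values())
    return human, midjourney, sum(human_days.values())


print(totals())  # (120, 260, 30)
```

By this rough count, Midjourney needed more than twice the active time overall (about 4.3 hours vs. 2), while the human route meant about a month of waiting across the three covers.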

Financial costs

In terms of financial costs, the AI predictably comes out ahead. A standard individual membership costs only $30 per month, and the resulting images can be used however you'd like. (However, if you're using the images as an employee of a company with more than $1 million in annual revenue, you'll need to purchase a "Corporate" plan for $600 per year.)

We spend about $600 per month on illustrations by human artists. The economic difference is obvious.
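Annualizing these figures makes the gap explicit. This is a minimal Python sketch, assuming our roughly $600 monthly spend on illustrators stays constant over a year; it also ignores the designer's own prompting time, which the time comparison above shows is not free.

```python
HUMAN_ILLUSTRATORS_PER_MONTH = 600   # USD, our approximate spend
MIDJOURNEY_STANDARD_PER_MONTH = 30   # USD, individual membership
MIDJOURNEY_CORPORATE_PER_YEAR = 600  # USD, required above $1M annual revenue


def yearly_costs() -> dict[str, int]:
    """Approximate yearly cost of each option, in USD."""
    return {
        "human illustrators": HUMAN_ILLUSTRATORS_PER_MONTH * 12,
        "Midjourney standard": MIDJOURNEY_STANDARD_PER_MONTH * 12,
        "Midjourney corporate": MIDJOURNEY_CORPORATE_PER_YEAR,
    }


costs = yearly_costs()
for option, usd in costs.items():
    print(f"{option}: ${usd:,}")
# Even the Corporate plan costs a fraction of the human budget
print(costs["human illustrators"] // costs["Midjourney corporate"])  # 12
```

That works out to about $7,200 a year for illustrators versus $360 (Standard) or $600 (Corporate) for Midjourney.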

Who comes out on top?

The experiment showed that human and AI artists each excel at different tasks. So we're considering using Midjourney in the following scenarios:

- When a Martian designer (a.k.a. a "prompt engineer") has some free time to actively generate images.

- When the idea for an illustration is simple and doesn’t involve multiple objects with complex interactions between them.

- When we need an illustration quickly and can’t wait for real 3D renders to be approved and ready.

🤖 Finally, a disclaimer: this article was partially written by AI, and the cover image was generated by AI as well.

AI is still no substitute for Evil Martian expertise! We’re here to help. Do you have a web or mobile application that requires expert problem solving with product design, frontend, backend, or software reliability? Looking to get your project off the ground? Reach out to us!