So, your developers use AI now—here's what to know

AI has quickly reshaped software development, but the benefits are still quite foggy. So, if you’re working with developers who regularly use AI to speed up their work, what can you actually expect of them? Can AI truly transform us all into “10x engineers” or is this just a pipe dream? This post has research-backed answers to help set reasonable expectations when dealing with AI-assisted developers.

TLDR: in reality, productivity boosts average 30-40% under certain (quite limited) circumstances, and can even be negative.

What the studies say

Not long ago, GitHub conducted research measuring developer productivity. Data was gathered both through surveys and by tracking performance on a specific task (implementing an HTTP server). Some interesting outcomes:

- 60-75% of developers said they feel more satisfied and fulfilled when working with AI assistants

- 87% said that they conserved mental energy when working on repetitive tasks

- 88% reported that they feel more productive with AI

When GitHub measured task completion speed, the group that was allowed to use AI finished 55% faster on average, and its completion rate was 8 percentage points higher. Sounds good!

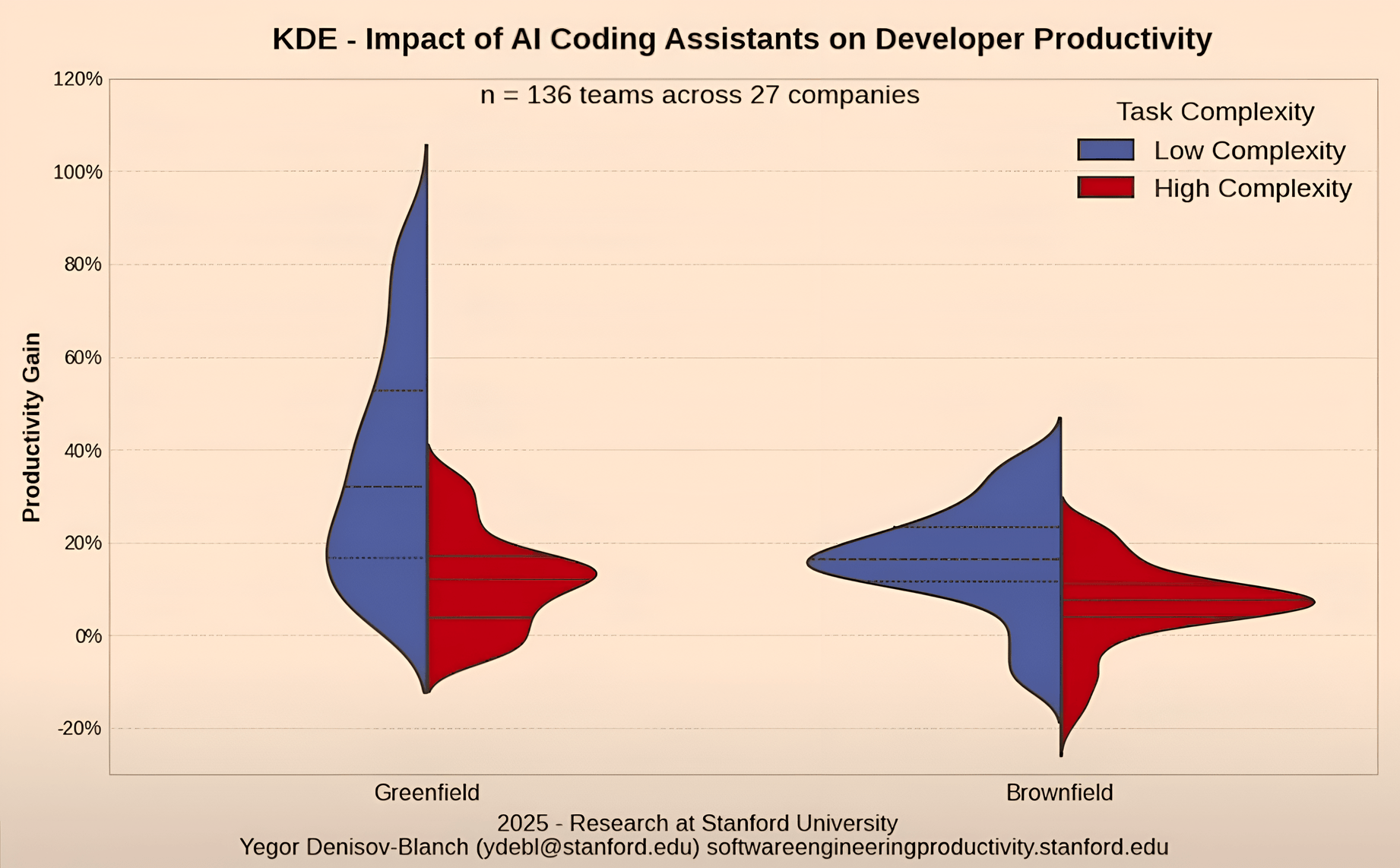

Next, let’s look at an absolute gem from researchers at Stanford, the talk “Does AI Actually Boost Developer Productivity?” I firmly believe this is one of the most important (if not the most important) pieces of research on the topic. If you have 18 minutes to spare, watch it!

Frankly speaking, any single slide from this talk could be framed and hung on your wall, but for our purposes, let’s focus on a few points:

Takeaways:

- The greatest productivity boost comes from low-complexity tasks in greenfield projects (that is, new projects started from scratch)

- For mature projects, the speed increase drops to 0-40%

- Also for mature projects, there’s a higher chance of getting a negative result. In other words, using AI can actually slow you down

This data is fairly representative (136 teams across 27 companies), so it seems reasonable to extrapolate it, more or less, to the majority of the industry.

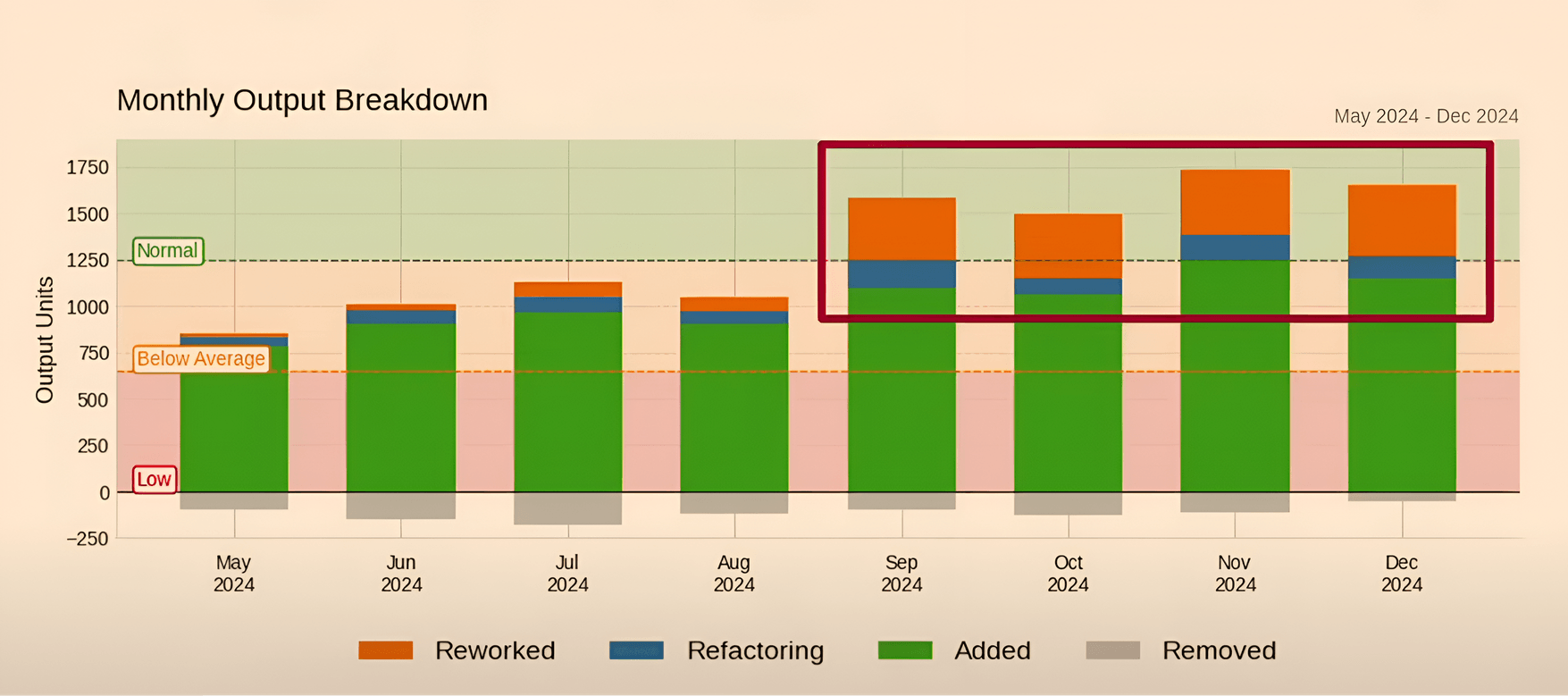

But we’re not finished yet. Let’s also have a look at this:

While AI does produce more code in general, it also produces more code that has to be reworked later (not to be confused with simple refactoring). This can be because the code didn’t meet quality standards, wasn’t extensible, had security or performance flaws, and so on. So, while we may end up shipping more code, we also ship more code that we’ll have to rewrite later.

Analyzing the numbers leaves us with a simple question: why is the productivity boost only 30-40%, and only under certain conditions, when an engineer’s primary function is supposedly writing code? If an engineer’s main workflow is getting automated, shouldn’t the average gain be at least 2x, even if, sadly, 10x seems unreachable?
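One way to reason about this is Amdahl’s law, which is our own framing here, not something the studies above use: if writing code takes a fraction p of an engineer’s time and AI makes that part s times faster, the overall speedup is 1 / ((1 - p) + p / s). The sketch below assumes, purely for illustration, that coding is 40% of the working week; that number is hypothetical, not measured data.

```typescript
// Amdahl's law applied to an engineer's time (an illustrative framing, not from the studies).
// codingShare: assumed fraction of total work time spent writing code (0 to 1).
// codingSpeedup: how many times faster AI makes that coding part.
function overallSpeedup(codingShare: number, codingSpeedup: number): number {
  if (codingShare < 0 || codingShare > 1) {
    throw new RangeError("codingShare must be between 0 and 1");
  }
  if (codingSpeedup <= 0) {
    throw new RangeError("codingSpeedup must be positive");
  }
  // Non-coding work takes as long as before; only the coding part shrinks.
  return 1 / (1 - codingShare + codingShare / codingSpeedup);
}

// Hypothetical scenario: writing code is 40% of the working week.
console.log(overallSpeedup(0.4, 2).toFixed(2)); // 1.25
console.log(overallSpeedup(0.4, 10).toFixed(2)); // 1.56
console.log(overallSpeedup(0.4, Infinity).toFixed(2)); // 1.67
```

In this made-up scenario, even infinitely fast coding caps the overall gain at about 1.67x. Reaching 2x would require coding to take at least half of the engineer’s time, even if AI made it instantaneous.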

An engineer’s goal is not writing code

If you take away a single thing from this article, let it be the following:

An engineer’s goal isn’t to write code, it’s to bring business value!

Yes, sometimes this means writing code (and a lot of it). But almost all of the time, the most important decisions actually lie outside of code. On that note, here is a great article about 100x engineers that is relevant to this.

They’re able to cut the right 5% of the feature to simplify its implementation by 100x.

Juraj Masar

Founder & CEO at Better Stack

Writing code is the most straightforward (and seemingly most valuable) activity, since you need to ship an actual app to start making money. But there are activities that are far more important and far more time-sensitive.

For instance:

- Understanding the customer, their vision for the project, and the market

- Understanding project needs, selecting the most suitable technologies, and planning the architecture

- Understanding which features are table stakes and which should be cut from the MVP to get things shipped faster

- Brainstorming ideas, looking for alternatives, and collaborating with the designer to find the perfect middle ground between awesome and easy-to-implement design…

The list goes on and on, but the main principle remains the same.

An engineer’s role isn’t to ship as much code as possible. It’s to bring as much value to business as possible within given constraints.

And sure, we engineers should prioritize speed, especially when working with early-stage startups. But this isn’t about the speed of writing code; the focus should be on the speed of fulfilling business needs. That can include quick prototyping, setting up a fast feedback loop, deeper research, and so on.

And yes, sometimes that means sitting down and writing thousands of lines of code.

But sometimes it means convincing the team that a particular feature has a terrible value-to-implementation-time ratio and should not be coded at all.

What is AI best used for

If you’ve recently used AI assistants and agents, you’ll probably recognize the two simple cases where we see the biggest productivity boosts:

- The average AI model outperforms you in a given field. Let’s say you’ve never touched Go and have never written GitHub Actions workflows. Even if the AI is absolutely mediocre at this task, it’s still better than someone with zero relevant experience

- The task is heavily pattern-based: following an existing pattern, writing generic boilerplate, or adhering to a strict, unforgiving guideline

This is especially noticeable in tasks that are simple, tedious, and repetitive, but easy to understand and reduce to a pattern:

- Writing stories for Storybook: when given even a single example, an LLM can easily follow the format and add stories for all components

- Migrating code from one format to another: for example, when we replaced style objects with Tailwind classes, every place like that needed the same kind of update

- Writing tests (under supervision, of course)

And so on. In other words, the better the examples and the task description, the better AI handles the implementation. This has led to a new workflow called “spec-driven development”: you work with the agent in a loop, describing tasks in a well-understood format (usually Markdown files), iterating, tracking progress, and refining requirements (something the agent can do itself by asking you questions).
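To show how mechanical a pattern-based task like the style-object-to-Tailwind migration can be, here is a hypothetical TypeScript sketch in which each style property maps to a class by a fixed rule. The mapping table is deliberately tiny and assumes Tailwind’s default spacing scale (one unit = 4px); it’s an illustration, not a production codemod.

```typescript
// A hypothetical, deliberately tiny subset of a style-object-to-Tailwind mapping.
// Assumes Tailwind's default spacing scale, where one unit equals 4px (0.25rem).
type StyleObject = Record<string, string | number>;

// Keyword values that map one-to-one onto a utility class.
const keywordClasses: Record<string, Record<string, string>> = {
  display: { flex: "flex", block: "block", none: "hidden" },
  flexDirection: { row: "flex-row", column: "flex-col" },
  justifyContent: { center: "justify-center", "space-between": "justify-between" },
  alignItems: { center: "items-center", "flex-start": "items-start" },
};

// Pixel-based properties that map onto the spacing scale.
const spacingPrefixes: Record<string, string> = {
  padding: "p",
  margin: "m",
  gap: "gap",
};

function toTailwind(style: StyleObject): { classes: string; unmapped: string[] } {
  const classes: string[] = [];
  const unmapped: string[] = [];
  for (const [prop, value] of Object.entries(style)) {
    const keyword = keywordClasses[prop]?.[String(value)];
    const prefix = spacingPrefixes[prop];
    if (keyword) {
      classes.push(keyword);
    } else if (prefix && typeof value === "number" && value % 4 === 0) {
      // 16px -> p-4, 8px -> gap-2 on the default scale.
      classes.push(`${prefix}-${value / 4}`);
    } else {
      // Anything without a clear rule is left for a human to decide.
      unmapped.push(prop);
    }
  }
  return { classes: classes.join(" "), unmapped };
}

const result = toTailwind({ display: "flex", flexDirection: "column", padding: 16, gap: 8, color: "#333" });
console.log(result.classes); // flex flex-col p-4 gap-2
console.log(result.unmapped.join(", ")); // color
```

A rule this regular is exactly what an LLM picks up from a single example. The `unmapped` list marks the cases that still need a human decision, which is where supervision comes in.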

Bottlenecks and hiccups

But as with every technology, there are tradeoffs, and no silver bullet has been discovered yet. When working with LLMs, it’s important to know what to pay extra attention to.

First, when AI writes a good portion of your code, your focus naturally shifts toward reviewing. This is logical: instead of writing code, you now have to review everything the LLM has generated.

And I can’t stress this enough: if you want your project to succeed long term, you can neither skip thorough reviews nor hand them off to another LLM (like the Copilot agent on GitHub). You are the person responsible for making sure AI-generated code meets all the required standards and contributes to the project’s long-term health.

Can AI write perfect code every time, code we can ship without checking? At this point in time: no. But then again, neither can humans. Maybe some day there will be a super-advanced AGI that shows us the full limits of engineering capabilities. But (un)fortunately, the technology isn’t there yet.

Second, productivity boosts gradually decline as the project grows and as the technology gets rarer. AI has a lot of training data, but the more popular a technology is, the bigger its share of that data. In other words: AI is good at writing React code with popular libraries, but it won’t perform as well when delivering code in Lua or Rust. There are ways to mitigate this: you can provide the LLM with documentation (for example, the Svelte docs), and that can help a lot. But it still won’t be nearly as effective as working with a widely adopted technology such as Next.js.

Last, but not least: some workflows gain almost nothing from LLMs. For example, imagine a client comes to you for a short optimization sprint, with only 2 weeks to identify and eliminate a performance problem. This often requires deep analysis, some refactoring, and updates to the architecture to ensure such problems won’t arise in the future. Usually only big projects suffer from performance problems of this scale. And if we want to leverage AI for this sprint, we will have to:

- Read the codebase and understand its code style, patterns, and rules

- Add rules for the LLM to act upon, so that it follows the project guidelines

- Check every piece of code it produces to make sure it didn’t break anything and that everything works as expected, because you are not yet very familiar with the codebase

- Your first iteration will inevitably be flawed, so you will have to repeat the previous two steps until you get the result you need

- But by doing so, you spend time instead of saving it. Sure, it would pay off in the long run, but you only have 2 weeks

In other words, for some small, tightly scoped tasks, an LLM can do more harm than good.

How to reach maximum productivity boost when using AI

Honestly, this section could be an entire article (or even a book). Let’s just boil it down to a few quick principles:

- Start from scratch. AI excels at one-shotting simple prototypes and writing tons of boilerplate from the ground up. The bigger your project is, the more work you will have to do to make the AI behave within the given constraints

- Start with a template and a set of rules (`AGENTS.md`, `.cursor/rules`, and so on). Before you begin, you should have a FULL picture of how your project should look, from code formatting and general code style to architecture and the technologies used. Your job is to pave the way for the LLM, not let it stray

- Use popular technologies. React/Next and TypeScript? Nice choice; there’s a lot of this code in the training data and AI knows what to do. OCaml? Not so much. Tailwind + Shadcn: good choice. A new UI library that came out yesterday: not so much

- Use defaults. Most Tailwind code, for example, was written with the default config, and you don’t want the AI to confuse itself trying to understand your custom one

- Leverage a fast feedback loop: let the AI hit problems as early as possible. Use linter rules to flag code style errors, and use the strictest, most strongly typed solution available whenever possible (for example, `@tanstack/router` has really good path typing). That way the LLM will stumble upon an issue right away and won’t spend much time on a flawed implementation

- Make sure all relevant data is in the context. For example, in Cursor you can attach files via `@` and say “follow the same structure as in this implementation of X, but now for Y”
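A minimal, library-free sketch of why strict typing shortens the feedback loop: if route paths form a closed union type, a typo in a path or parameter name becomes a compile error the agent sees immediately, instead of a broken link discovered at runtime. The routes and the `buildPath` helper are hypothetical; `@tanstack/router`’s own API is different (and richer), and this only imitates the idea.

```typescript
// Hypothetical routes for illustration; this is plain TypeScript, not the @tanstack/router API.
type RoutePath = "/" | "/posts/:postId" | "/users/:userId/settings";

// Collects parameter names from a path: "/users/:userId/settings" -> "userId".
type ParamNames<P extends string> =
  P extends `${string}:${infer Param}/${infer Rest}`
    ? Param | ParamNames<Rest>
    : P extends `${string}:${infer Param}`
      ? Param
      : never;

// Every parameter in the path becomes a required string property.
type ParamsOf<P extends string> = { [K in ParamNames<P>]: string };

function buildPath<P extends RoutePath>(path: P, params: ParamsOf<P>): string {
  const values = params as unknown as Record<string, string>;
  // Substitute each ":name" segment with its URL-encoded value.
  return path.replace(/:(\w+)/g, (_match: string, name: string) =>
    encodeURIComponent(values[name] ?? ""),
  );
}

console.log(buildPath("/posts/:postId", { postId: "42" })); // /posts/42

// Each of these would fail to type-check, so the mistake surfaces immediately:
// buildPath("/post/:postId", { postId: "42" });  // unknown route
// buildPath("/posts/:postId", { postid: "42" }); // misspelled parameter
// buildPath("/posts/:postId", {});               // missing parameter
```

The tradeoff is some type-level complexity, but it is written once and then checked on every edit, by humans and agents alike.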

A productive conclusion

No matter how much we want it, the technology isn’t really there yet to make everyone a 10x engineer. BUT the total productivity boost can reach 30-50% under certain circumstances:

- Within a familiar field, backed by general expertise in the area

- Using a popular tech stack

- For small to mid-sized projects

- If the engineer knows when not to use AI and just does the work by hand